Research shows that binary options traders in Burkina Faso are always looking for profitable markets. Many traders today choose binary options to make money easily and quickly. Binary traders in Burkina Faso can invest in underlying assets without difficulty because the government imposes no specific laws on this activity, and the country allows all kinds of traders to operate. This is exactly why traders must be careful about whom they trade with. If the price moves in the direction a trader predicted, they can earn a large profit; if it moves the other way, they must endure the pain of heavy losses.

In this article, we walk through some basic, simple steps you can follow to start your trading journey.

(Risk warning: Your capital may be at risk.)

Step-by-step guide to trading binary options in Burkina Faso


The whole process is easy to understand, and the trading itself is easier still. All traders need to do is plan properly and choose the best strategy for the current market.

Even a perfect strategy does not guarantee that a trader will never face losses. Losses are an essential part of trading, so it is wiser to accept them as gracefully as you enjoy your profits. However, if you follow the steps we describe, you may find it easier to keep your losses under control.

#1 Choose an available broker that offers services in Burkina Faso

100+ markets

- Min. deposit $10

- Demo account $10,000

- Professional platform

- High returns of up to 95%

- Fast withdrawals

- Signals

From $10

(Risk warning: Trading is risky)

100+ markets

- Accepts international clients

- High payouts of 95%+

- Professional platform

- Fast deposits

- Social trading

- Free bonus

From $50

(Risk warning: Trading is risky)

250+ markets

- Crypto options

- Various payment methods

- High returns of 88%+

- User-friendly interface

- Personal support

- Fast registration

From $10

(Risk warning: Your capital may be at risk)

Choosing a broker without analysing it or doing proper research can lead to disaster and severe losses. The binary trading market is deep, and many brokers offer their services. It is the trader's responsibility to choose a genuine, registered broker before investing.

Because binary trading has spread so widely, the market is flooded with fake brokers. We therefore always advise traders to research each broker thoroughly and verify its authenticity.

You do not have to trade with any one particular broker. Remember that every broker is unique and none is perfect. Traders can work with several brokers according to their preferences, so do not hesitate to trade with different brokers when the opportunity arises.

1. Quotex

Quotex is a modern platform that offers more than 400 assets and is among the most trusted brokers. It is one of those platforms that built a large following in a short time.

Below, we describe some of its features to give you the information you need to decide accordingly:

- Deposits and withdrawals are straightforward, with a minimum investment of $10.

- The platform supports a wide range of payment methods, from cards and cryptocurrencies to bank transfers and e-payment platforms.

- Each trader who opens a new live account receives an additional 30% bonus.

(Risk warning: Your capital may be at risk.)

2. Pocket Option

Pocket Option is a binary options trading platform and one of the most trusted brokers on the market today. This online broker gives its traders some of the best trading offers available. Pocket Option is known for its key tools, technical indicators and other trading tools that binary traders can benefit from. The platform is easy to understand and useful for beginners and experienced traders alike.

In addition, Pocket Option offers its traders these benefits:

- The broker offers flexible trading options and accepts traders from around the world.

- The platform has more than 20,000 active users every day.

- The registration process is very simple and suits every type of trader.

(Risk warning: Your capital may be at risk.)

3. Focus Option

Compared with the other brokers, Focus Option is relatively new. It allows almost anyone to open an account. It is both a binary options platform and a CFD trading platform. This simple, easy-to-use platform has gained popularity and become a favourite among traders. However, it is not yet covered by any international regulation, so its legitimacy is often questioned.

We describe some of its features here:

- The platform offers traders more than 80 cryptocurrencies.

- Traders can have their withdrawals processed within 24 hours.

- The minimum investment is $10.

(Risk warning: Your capital may be at risk.)

#2 Sign up for a trading account

We have simplified the registration process into a few basic steps:

- Decide which broker you want to use and choose your preferred one.

- Visit your chosen broker's official website.

- Click the registration button on the web page.

- Next, provide all the details you are asked for.

- Finally, double-check all your details and click the 'Submit' button.

(Risk warning: Your capital may be at risk.)

#3 Use a demo trading account

A demo account is essentially a practice account. When beginners first start trading, investing large amounts can be risky for them, so a demo account is the best way to begin. Traders with little experience can start with a demo account, which lets them get familiar with market orders and market dynamics.

Experienced traders, however, can go straight to live trading to pursue profits and grow their accounts.

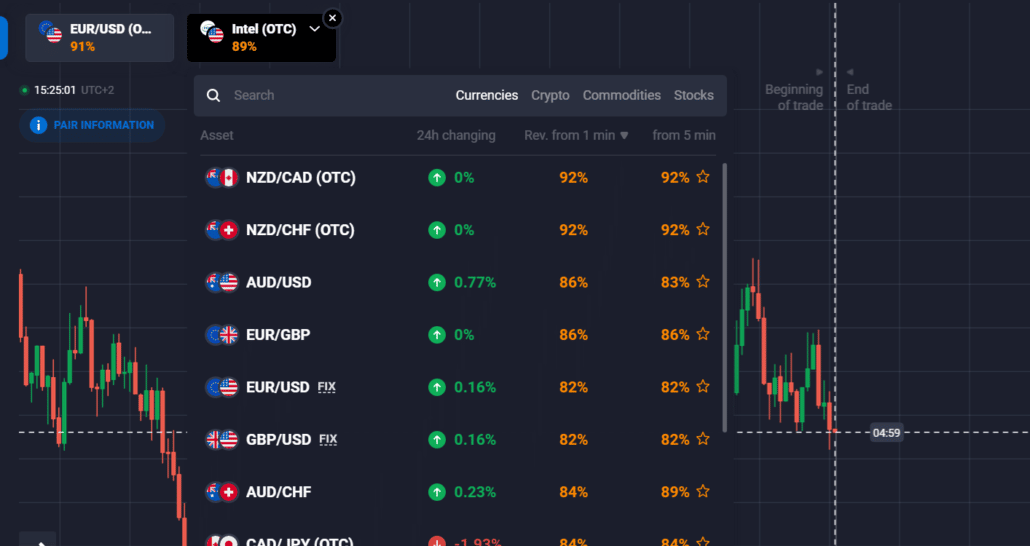

#4 Choose an asset to trade

Once they have chosen an underlying asset, traders can start their binary options trading journey. Traders usually find underlying assets on online trading platforms. There they can pick assets that suit them and may help improve their trading returns.

(Risk warning: Your capital may be at risk.)

#5 Do a proper analysis

By now it should be clear that binary trading is unpredictable and dynamic. There is no foolproof method for predicting whether rates will rise or fall, so following an economic calendar is always a good basis for anticipating market moves. Under these conditions, pick the most viable option that suits you, based on the time frame and the asset information.

#6 Place your trade

Once you have done the necessary analysis, it is time to place your binary options trade by selecting one of the available underlying assets. Remember that the asset list shows the payout percentage in advance.
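Here is a minimal Python sketch of what the quoted payout percentage means for a single trade. It assumes a fixed-payout option in which a correct prediction earns the quoted percentage of the stake and an incorrect one loses the whole stake. The function name `trade_profit` is hypothetical, and individual brokers' rules may differ.

```python
def trade_profit(stake: float, payout_rate: float, won: bool) -> float:
    """Net profit of one fixed-payout binary option (illustrative sketch).

    stake       -- amount invested in the trade
    payout_rate -- payout quoted by the broker as a fraction, e.g. 0.95 for 95%
    won         -- True if the prediction was correct at expiry
    """
    if stake <= 0:
        raise ValueError("stake must be positive")
    if payout_rate < 0:
        raise ValueError("payout_rate cannot be negative")
    # Assumption: a correct prediction earns stake * payout_rate on top of the
    # returned stake; a wrong prediction loses the entire stake.
    return stake * payout_rate if won else -stake


if __name__ == "__main__":
    print(trade_profit(10, 0.95, True))   # 9.5
    print(trade_profit(10, 0.95, False))  # -10
```

With a $10 stake and a 95% payout, a correct trade makes a $9.50 profit and an incorrect one loses $10. This is why the payout percentage is worth checking before you place a trade.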

#7 Wait for the result

Once the binary option expires, the trader learns the outcome. Underlying assets and their prices are never fixed; they change constantly with time and circumstances. The trade is profitable only if the trader's prediction matches the actual price movement.

If the prediction does not match, the trader suffers a heavy loss. That is why proper market analysis is necessary. It is one of the main ways traders can find the right asset and the right moment to place their binary options trades.
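Below is a rough sketch of how a trade is settled at expiry. It is a hypothetical illustration, not any broker's actual code. It assumes a simple "call" prediction (the price will be higher) or "put" prediction (the price will be lower), checked against the entry price at expiry. An exact tie is reported separately because brokers handle ties differently. The names `Direction` and `prediction_correct` are made up for this example.

```python
from enum import Enum
from typing import Optional


class Direction(Enum):
    CALL = "call"  # trader predicts a higher price at expiry
    PUT = "put"    # trader predicts a lower price at expiry


def prediction_correct(direction: Direction, entry_price: float,
                       expiry_price: float) -> Optional[bool]:
    """Return True if the prediction was right, False if wrong, None on a tie.

    Assumption: ties are returned as None because broker rules for them vary
    (check your broker's terms).
    """
    if expiry_price == entry_price:
        return None
    price_rose = expiry_price > entry_price
    # A call wins when the price rose; a put wins when it fell.
    return price_rose if direction is Direction.CALL else not price_rose


if __name__ == "__main__":
    print(prediction_correct(Direction.CALL, 1.1000, 1.1012))  # True
    print(prediction_correct(Direction.PUT, 1.1000, 1.1012))   # False
```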

(Risk warning: Your capital may be at risk.)

What are binary options?

Binary options are simply derivatives of an underlying asset. A trader faces one of two outcomes: either a payout or nothing at all. In addition, each option has a fixed expiry time.

To earn a large profit, you need to predict the outcome correctly within the chosen time frame. You will either make a large profit or lose everything you invested in that trade.

Still, before drawing any conclusions, it is always advisable to analyse both the market and the broker you will trade with in detail.

Is binary trading legal in Burkina Faso?

Yes, binary trading is permitted and legal in Burkina Faso. It has been observed that almost all the laws and rules set by the Burkina Faso government are friendly toward binary options trading. Traders here can withdraw their investments at any time.

The one thing traders and brokers must be careful about is authenticity. The country does not restrict brokers that provide genuine services to their traders, and transparency should be maintained at all times.

(Risk warning: Your capital may be at risk.)

Payment methods for traders in Burkina Faso

Traders in Burkina Faso have many options when they want to transfer funds or invest in underlying assets. Most brokers operating in Burkina Faso offer basic payment methods that local traders can use for binary options trading. Below, we list some of the most reliable and easy-to-use ways to make your payments.

Bank transfer

Bank transfer has been found to be the simplest and most cost-effective way to pay, and it is the traditional payment method. Traders in Burkina Faso can fund their live trading accounts with a simple bank transfer.

E-wallets

Electronic wallets, also known as e-wallets, are another way to make payments. Burkina Faso traders have many e-wallet options for funding their accounts, and electronic payments are their main payment method.

E-wallets have been observed to offer the fastest and most convenient way to trade binary options. MasterCard is also available to Burkina Faso traders for quick, convenient deposits and withdrawals.

Card payments

Burkina Faso traders can use their bank cards to make deposits and large withdrawals on binary trading platforms. Cards are one of the oldest forms of payment and are trusted worldwide. Debit and credit cards are the easiest way to pay, and traders can use them from anywhere in the world.

How to deposit and withdraw?

The process is simple: traders can deposit in a variety of currencies. Depositing any amount is usually free and carries no additional charges, but traders should check with their bank before depositing. Keep in mind that the broker is not responsible for your investment.

Withdrawing is also simple, although each broker has its own withdrawal methods. Traders should watch out for hidden charges, which are often deducted. Generally, an email is sent once the withdrawal is complete.

Pros and cons of binary options trading in Burkina Faso

If you are unsure whether to trade binary options in Burkina Faso, read on, as we explain the pros and cons in detail:

The advantages of binary trading are:

- The platforms are very basic and easy for traders to understand. Traders at every level find binary trading enjoyable.

- One remarkable feature of binaries is fast, efficient payouts.

- Risk can be controlled and managed effectively, because the amount at stake is fixed before the trade is placed.

The disadvantages of binary trading are:

- Because binary options trading is popular, traders often end up dealing with unregulated brokers who may cheat them out of their money.

- The market must be analysed in detail, which often becomes very time-consuming.

- Traders often have to chase higher returns by taking on greater risk.

Risks of binary options trading in Burkina Faso

Traders who trade binaries often risk only a small fraction of their total investment. The risk involved in binary options trading is small, but it cannot be avoided. Traders are often tempted to try to get rich quickly with binary options trading.

- Traders often have to wait until the trade expires and the result is announced. As our analysis shows, binary trading can therefore be time-consuming.

- One of the biggest risks is that the binary trading market is unregulated. All kinds of brokers and traders operate on these platforms, so there is often a chance of traders getting caught up in irresponsible practices.
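To put these risks in numbers, the sketch below computes the break-even win rate and the average profit per trade for a fixed-payout option. It assumes a correct prediction earns the payout percentage R of the stake and a wrong one loses the whole stake. The average profit per unit staked is then w·R − (1 − w), which equals zero at w = 1 / (1 + R). The function names are hypothetical.

```python
def breakeven_win_rate(payout_rate: float) -> float:
    """Smallest share of winning trades needed not to lose money on average.

    Solves w * R - (1 - w) = 0 for w, which gives w = 1 / (1 + R).
    """
    if payout_rate <= 0:
        raise ValueError("payout_rate must be positive")
    return 1.0 / (1.0 + payout_rate)


def expected_profit_per_trade(stake: float, payout_rate: float,
                              win_rate: float) -> float:
    """Average profit per trade when a share `win_rate` of trades is correct."""
    if not 0.0 <= win_rate <= 1.0:
        raise ValueError("win_rate must be between 0 and 1")
    # Winning trades earn stake * payout_rate; losing trades lose the stake.
    return win_rate * stake * payout_rate - (1.0 - win_rate) * stake


if __name__ == "__main__":
    for rate in (0.88, 0.95):
        print(f"payout {rate:.0%}: break-even win rate {breakeven_win_rate(rate):.2%}")
    # A trader who is right half the time, staking $10 at an 88% payout:
    print(f"{expected_profit_per_trade(10, 0.88, 0.5):.2f}")  # -0.60
```

At an 88% payout, the break-even win rate is about 53.19%; at 95%, it is about 51.28%. A trader who is right only half the time therefore loses about $0.60 per $10 trade at an 88% payout.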

Burkina Faso is a landlocked country in West Africa. It borders the following countries:

- Mali: Burkina Faso shares its northern and western borders with Mali. The border between the two countries runs along most of Burkina Faso's northern frontier.

- Niger: Burkina Faso shares its eastern border with Niger. The border between Burkina Faso and Niger lies in the Sahel region.

- Benin: Burkina Faso shares its south-eastern border with Benin. The border lies in the south-east of Burkina Faso.

- Togo: Burkina Faso shares part of its southern border with Togo. The border lies in the south of Burkina Faso, east of the border with Ghana.

- Ghana: Burkina Faso shares much of its southern border with Ghana. The border runs across the south of Burkina Faso.

- Côte d'Ivoire (Ivory Coast): Burkina Faso shares its south-western border with Côte d'Ivoire. The border lies in the south-west of Burkina Faso.

Countries near Burkina Faso

If you live or work in any of these countries, you may also be interested in information about binary options trading there. Follow the links below for our guides.

These are the countries bordering Burkina Faso:

(Risk warning: Your capital may be at risk.)

Conclusion: Binary options trading is available in Burkina Faso

Having read the whole article, you should now understand the ups and downs of binary trading. We recommend that, before investing, you always know how much you are willing to risk in any given transaction. Binary trading may be one of the easiest and fastest ways to build wealth, but it can also be a scam. Traders are therefore always warned to be careful about investing in any asset, or with any broker, without analysing it first.

Frequently asked questions (FAQ) about binary options in Burkina Faso:

Does binary options trading in Burkina Faso offer traders a quick way to make money?

Yes, binary options trading is a form of trading with high returns, and it is certainly one of the easiest ways to make money.

Where can I trade binary options in Burkina Faso?

The brokers mentioned in this article provide genuine services in Burkina Faso. However, traders who want to use a different broker are free to do so.

Is binary options trading risky in Burkina Faso?

Yes, trading binaries in Burkina Faso is definitely risky. Risk is always part of trading, no matter how accurate a prediction seems. Binary trading is always subject to risk.