Research shows that binary options traders in Burkina Faso are always in search of a lucrative market. These days, traders are opting for binary options to try to make money quickly. Traders from Burkina Faso can easily invest in underlying assets because the government imposes no laws restricting such trades. The country allows all kinds of traders to trade, so traders need to be careful about whom they trade with. If prices move as predicted, the trader is likely to make a large profit; if they move the other way, the trader has to bear equally large losses.

In this article, we walk through some basic steps to help you start trading.

(Risk Warning: Your capital can be at risk.)

A step-by-step guide to trade Binary Options in Burkina Faso

The process is easy to understand. What the trader needs is a sound plan and a strategy suited to current market conditions.

Even a perfect strategy does not guarantee that the trader will avoid losses. Losses are an essential part of trading, so it is wiser to accept them as calmly as you enjoy your profits. If you follow the steps below, there is a better chance that you will keep the number of losses down.

#1 Pick an available broker that offers services in Burkina Faso

100+ Markets

- Min. deposit $10

- $10,000 demo

- Professional platform

- High profit up to 95%

- Fast withdrawals

- Signals

from $10

(Risk warning: Trading is risky)

100+ Markets

- Accepts international clients

- High payouts 95%+

- Professional platform

- Fast deposits

- Social Trading

- Free bonuses

from $50

(Risk warning: Trading is risky)

250+ Markets

- Crypto options

- Multiple payment methods

- High profit up to 88%+

- User-friendly interface

- Personal support

- Fast registration

from $ 10

(Risk warning: Your capital can be at risk)

Choosing a broker without analyzing it and doing proper research can lead to severe losses. The binary trading market is large, and numerous brokers provide services in it. It is the trader's responsibility to select a genuine, registered broker before investing.

Because binary trading has expanded so quickly, the market is flooded with fake brokers. We therefore advise traders to always research a broker's authenticity before trading with it.

It is not necessary to stick with one particular broker. Every broker is different, and none is perfect. Traders can work with multiple brokers according to their preferences, so don't hesitate to trade with different brokers when the opportunity arises.

1. Quotex

Quotex is a modern platform that offers more than 400 assets and is regarded as a trustworthy broker. It has gained a large following in a short period.

Here are some of its features to help you make a decision:

- Deposits and withdrawals are straightforward, with a minimum investment of $10.

- Payments can be made through various methods, ranging from cards and cryptocurrencies to bank transfers and e-payment platforms.

- Each time a trader registers a new live account, a 30% bonus is provided.

2. Pocket Option

Pocket Option is one of the most reliable binary options brokers in the current market. This online broker provides its traders with competitive deals. Pocket Option is known for its technical indicators and other trading tools that binary traders can benefit from. The platform is easy to understand and suits both beginners and experienced traders.

Pocket Option also provides these benefits to its traders:

- The broker offers flexible trading options and accepts international traders.

- The platform has more than 20,000 active daily users.

- The signup process is extremely easy and can be completed by traders of all types.

3. Focus Option

Compared with the other brokers, Focus Option is relatively new. It allows almost everyone to open an account. It offers both binary options and CFD trading. The platform is convenient and easy to use, and it has become popular with traders. Since it is not yet under any international regulation, its authenticity is often questioned.

A few of its features are listed here:

- The platform offers more than 80 cryptocurrencies to its traders.

- Withdrawals can be processed within 24 hours.

- The minimum investment is $10.

#2 Sign up for a trading account

Signing up takes a few basic steps:

- Decide which broker you want to use.

- Visit the official website of your chosen broker.

- Click the signup button on the web page.

- Provide all the details you are asked for.

- Finally, cross-check all the details and click 'submit'.

#3 Use a demo trading account

A demo account is, practically speaking, a practice account. For a beginner just starting out, investing large amounts can be risky, so a demo account is the best way to begin. It lets a trader with little experience get familiar with market orders and market dynamics without risking real money.

An experienced trader, on the other hand, can move straight to live trading.

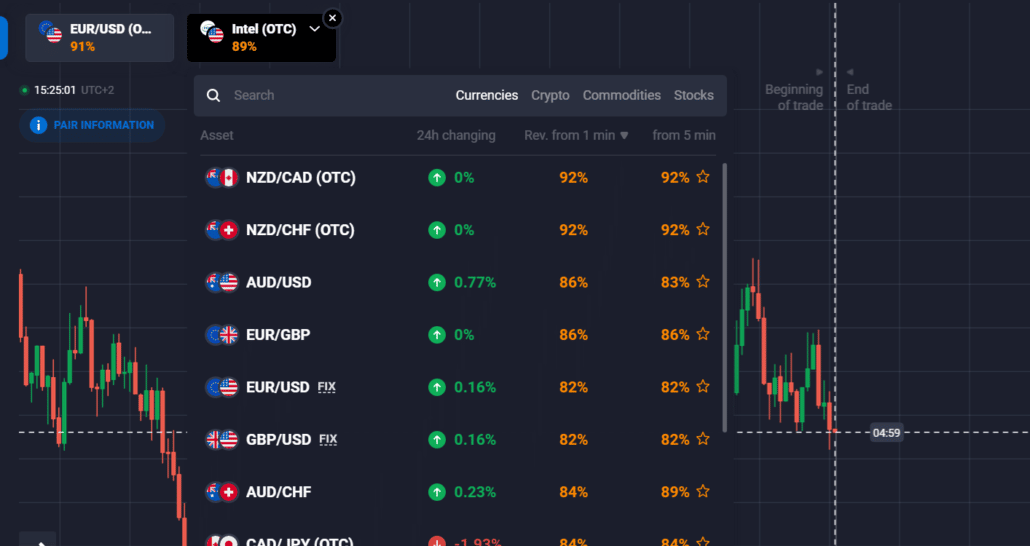

#4 Pick an asset to trade

Trading begins with choosing an underlying asset. Traders usually browse the assets available on the online trading platform and pick one that suits them; a well-chosen asset can improve trading profitability.

#5 Make a proper analysis

By now, it should be clear that binary trading is unpredictable and dynamic. There is no perfect way to predict whether rates will rise or fall, but following an economic calendar gives traders a good basis for anticipating market movement. Choose the option that best suits your preferences, depending on the time frame and the information available about the asset.

#6 Place your trade

Once the analysis is done, it's time to place the trade. Choose one of the available underlying assets from the list. Remember that the list shows the payout percentage for each asset before you commit.
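Because the payout percentage is shown before the trade is placed, the win rate needed to break even can be worked out in advance. The following is a minimal sketch, assuming a losing trade forfeits the whole stake (the all-or-nothing outcome this article describes). The function name is hypothetical, and the 95% and 88% figures are the "up to" payouts quoted in the broker listings above, not guaranteed rates.

```python
def break_even_win_rate(payout_pct: float) -> float:
    """Share of trades that must win for expected profit to be zero.

    Assumption: a winning trade pays payout_pct percent of the stake,
    and a losing trade forfeits the entire stake.
    """
    if payout_pct <= 0:
        raise ValueError("payout_pct must be positive")
    payout = payout_pct / 100.0
    # Expected profit per unit staked: p * payout - (1 - p) = 0
    # Solving for p gives p = 1 / (1 + payout).
    return 1.0 / (1.0 + payout)


for pct in (95, 88):
    rate = break_even_win_rate(pct)
    print(f"{pct}% payout -> break-even win rate {rate:.1%}")
```

Under these assumptions, even a 95% payout requires winning a little more than half of all trades just to avoid losing money overall.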

#7 Wait for the results

After the binary option expires, the trader learns the result. The prices of underlying assets are never fixed; they vary with time and circumstances. The trader makes a profit when the prediction matches the market's actual movement.

If the prediction doesn't match, the trader faces a loss. Proper market analysis is therefore necessary: it is key to finding the right asset and the right timing for a binary options trade.

What is a Binary Option?

A binary option is what is popularly known as an asset derivative. The trader faces one of two outcomes: either a profit or nothing at all. It also has a fixed expiration date.

To make a profit, you need to predict the outcome correctly within the chosen period. You will either earn the payout or lose everything you invested in that trade.

Before jumping to conclusions, it is always advisable to analyze the market and the brokers you are about to trade with in detail.
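The two-outcome payoff described in this section can be written down in a few lines. This is an illustrative sketch, not any broker's actual settlement logic: the function name is hypothetical, the $10 stake and 95% payout are taken from the broker listings earlier in the article, and it assumes a wrong prediction loses the full stake.

```python
def binary_option_profit(stake: float, payout_pct: float, prediction_correct: bool) -> float:
    """Profit (positive) or loss (negative) from one all-or-nothing trade."""
    if stake <= 0:
        raise ValueError("stake must be positive")
    if payout_pct < 0:
        raise ValueError("payout_pct must not be negative")
    if prediction_correct:
        # A correct prediction earns the payout percentage on the stake.
        return stake * payout_pct / 100.0
    # Assumption: an incorrect prediction forfeits the entire stake.
    return -float(stake)


print(binary_option_profit(10, 95, True))   # 9.5
print(binary_option_profit(10, 95, False))  # -10.0
```

Note the asymmetry: with these figures a win adds $9.50, while a loss removes the full $10.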

Is Binary Trading legal in Burkina Faso?

Yes, binary trading is allowed and legal in Burkina Faso. Almost all of the laws and regulations that the Burkina Faso government imposes are friendly to binary options trading. Traders here can withdraw their investments at any time.

The only things traders need to watch closely are the authenticity and genuineness of the broker. The country places no limitations on brokers who provide genuine service to traders. Transparency should be maintained at all times.

Payment methods for traders in Burkina Faso

Traders in Burkina Faso have multiple options for transferring funds or investing in underlying assets. Almost all brokers that operate in Burkina Faso provide the basic payment methods that traders can use to trade binary options. Below are some of the most reliable and easy-to-use ways to make your payments.

Bank transfers

Bank transfers are among the easiest and most cost-effective modes of payment. As a traditional payment method, they let traders in Burkina Faso fund their live trading accounts with a simple transfer.

Electronic wallet

The electronic wallet, popularly known as an e-wallet, is another way to make payments. Many e-wallet options are available to Burkina Faso traders for funding their accounts, and electronic payments are a leading payment method in the country.

E-wallets provide one of the fastest and easiest ways to fund binary options trades. MasterCard is also available to Burkina Faso traders to speed up deposits and withdrawals.

Card payments

Burkina Faso traders can use their bank-linked cards to make large deposits and withdrawals on binary trading platforms. As one of the oldest forms of payment, cards are trusted all over the world. Debit and credit cards are among the easiest ways to pay, and traders can use them from anywhere.

How to deposit and withdraw?

The process is simple, and traders can deposit in various currencies. Depositing funds is usually free and doesn't incur extra charges. Check with your bank before making a deposit. Remember that the broker will not be accountable for your investment.

Withdrawing is also simple, though every broker has its own methods for withdrawing funds. Watch out for hidden charges, which are often deducted. Generally, an email is sent once the withdrawal is completed.

Pros and cons of Binary Options trading in Burkina Faso

If you are unsure whether to trade binary options in Burkina Faso, keep reading. Below we go through some of the pros and cons in detail:

The Pros of Binary Trading are:

- The platforms are simple and easy to understand. Traders at all levels find binary options approachable.

- One notable feature of binary options is quick payouts.

- The risk on each trade is known in advance, so it can be managed by deciding beforehand how much to stake.

The Cons of Binary Trading are:

- Because binary options trading is in fashion, traders often encounter unregulated brokers who can scam them out of their money.

- A detailed analysis of the market is needed, which is often very time-consuming.

- Traders often face a high chance of substantial losses.

Risks of Binary Options trading in Burkina Faso

A trader who manages risk carefully often stakes less than 1% of total capital on a single trade. That keeps the damage from any one loss small, but it does not make the risk avoidable. Traders are often drawn to binary options trading in the hope of getting rich quickly.

- The trader has to wait for the trade to expire before the result is known, so binary trading can be time-consuming.

- One of the biggest risks is that the binary trading market is not regulated, and all sorts of brokers and traders operate in it. There is always a chance of the trader falling victim to unscrupulous practices.
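The "less than 1%" figure mentioned in this section can be read as a position-sizing rule: cap the stake on any single trade at a small fraction of the account. The sketch below is one possible reading, not advice from any broker. The function names and the $1,000 example balance are hypothetical, and it assumes each losing trade forfeits its full stake.

```python
def max_stake(balance: float, risk_fraction: float = 0.01) -> float:
    """Largest stake allowed when risking at most risk_fraction of the balance."""
    if balance < 0:
        raise ValueError("balance must not be negative")
    if not 0 < risk_fraction <= 1:
        raise ValueError("risk_fraction must be in (0, 1]")
    return balance * risk_fraction


def balance_after_losses(balance: float, risk_fraction: float, losses: int) -> float:
    """Balance left after consecutive losing trades, re-sizing the stake each time."""
    if losses < 0:
        raise ValueError("losses must not be negative")
    for _ in range(losses):
        # Assumption: each losing trade forfeits its whole stake.
        balance -= max_stake(balance, risk_fraction)
    return balance


print(f"Max stake on $1,000: ${max_stake(1000):.2f}")
print(f"After 10 straight losses: ${balance_after_losses(1000, 0.01, 10):.2f}")
```

With a 1% cap, ten consecutive losses still leave roughly 90% of the account, which is the point of sizing stakes this way.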

Burkina Faso is a landlocked country located in West Africa. It is bordered by the following countries:

- Mali: Burkina Faso shares its northern and western border with Mali. This border runs along a significant portion of the country's boundary.

- Niger: Burkina Faso shares its eastern border with Niger. This border lies in the Sahel region.

- Benin: Burkina Faso shares its southeastern border with Benin.

- Togo: Burkina Faso shares part of its southern border with Togo, in the southeastern part of the country.

- Ghana: Burkina Faso shares most of its southern border with Ghana.

- Côte d’Ivoire (Ivory Coast): Burkina Faso shares its southwestern border with Côte d’Ivoire.

Countries near Burkina Faso

If you live or work in any of these countries, you may be interested in the information on binary options trading in these countries, too. Follow the link below to receive the information from us.

Conclusion: Binary Options trading is available in Burkina Faso

Having read the entire article, you should now be aware of the ups and downs involved in binary trading. Before you invest, always decide how much you are prepared to risk in a particular transaction. Binary trading may be one of the fastest ways to make money, but the market also contains scams. Traders should therefore stay vigilant and never commit money to an asset or a broker without prior analysis.

Frequently Asked Questions (FAQs) about Binary Options in Burkina Faso:

Does binary options trading in Burkina Faso offer traders a quick way to make money?

Yes, binary options are a form of trading where returns can be high and come quickly, although losses are just as possible.

Where can I trade binary options in Burkina Faso?

The brokers that provide genuine services in Burkina Faso are the ones already mentioned in this article. A trader who prefers a different broker is free to use one.

Is binary options trading risky in Burkina Faso?

Yes, trading binary options in Burkina Faso is certainly risky. Risk is always a part of trading, no matter how careful the prediction, and binary trading is no exception.