Several brokers now offer no-deposit bonuses for binary options. We go through them one by one to highlight what is particular about each bonus.

In this comparison, we present the 4 best binary options brokers for no-deposit bonuses. You can get a free bonus of up to $100.

To get a free no-deposit bonus of $100, use the promo code "BOFREE" with the binary options brokers listed below.

Comparison of the 4 best binary options brokers with a no-deposit bonus:

| Broker | No-deposit bonus | Advantages | Account |
| --- | --- | --- | --- |
|  | $100 | Free demo account; fast registration; high returns of 90%+; multiple assets; trade with just cents | (Risk warning: your capital may be at risk) |
|  | $100 | Free demo account; video support; high returns; fast registration | (Risk warning: your capital may be at risk) |
|  | $100 | Best for bonuses; personal support; cryptocurrency trading; CFDs | (Risk warning: your capital may be at risk) |
|  | $100 | Trade with cents; fast registration; high returns; innovative platform | (Risk warning: your capital may be at risk) |

List of the 4 best binary options brokers with a no-deposit bonus:

In the list on this website, each broker is reviewed by its conditions and features. The brokers in this comparison differ slightly, but overall their conditions are similar. You can also read each review in detail.

Watch our video on how to use the no-deposit bonus:

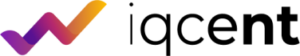

1. IQcent

IQcent is our first choice for using a free no-deposit bonus for binary options. On the platform you can trade 100 different assets with a high return of 90%+. The platform is available on any device.

You get a free $100 no-deposit bonus right after registration. You can also use a demo account to check the platform's features first.

What is so special about IQcent?

The minimum trade amount is a few cents and the minimum deposit is only $20. This broker suits both small and large investments.

- Free no-deposit bonus of $100

- More than 100 assets to trade

- Trading 24/7

- Personal support

- Low minimum deposit of $20

- Free demo account

- Minimum trade amounts of a few cents

- Copy trading available

- Instant withdrawals

- 10+ payment methods

(Use the promo code "BOFREE")

2. VideForex

VideForex's special feature is video support for every client. You can also trade more than 100 assets on this platform. The conditions are similar to IQcent's, but here you must deposit at least $250 to trade live.

- Minimum deposit of $250

- Minimum trade amount of $1

- Video support 24/7

- 100+ assets

- Fast trade execution

(Use the promo code "BOFREE")

3. RaceOption

RaceOption is another broker that offers a free $100 no-deposit bonus. The platform is known for its professional social trading features. Without any experience, you can copy other traders and make profits (but also losses). There are 100 assets available for trading, and the minimum deposit is $250.

- Social trading

- High return of 88%+

- $250 minimum deposit

- Fast withdrawals

- Bonuses

(Use the promo code "BOFREE")

4. BinaryCent

BinaryCent is a broker that has been around for many years and is preferred by traders who want to trade with small sums of money. You can start trading with a minimum trade amount of $0.10. Copy trading is also offered.

- $250 minimum deposit

- More than 15 payment methods

- Low minimum trade amount of $0.10

- Support 24/7

- Free bonuses

- Copy trading

(Use the promo code "BOFREE")

What is a binary options no-deposit bonus? (welcome bonus)

Binary options no-deposit bonuses are bonuses that binary options brokers give to traders, usually credited to a live account, without the trader having first put any trading capital into the account. In other words, they are binary options bonuses that require no prior deposit.

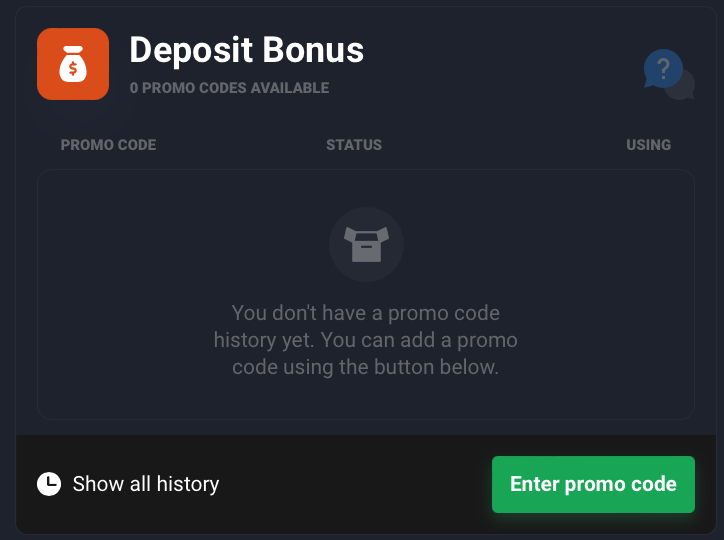

The no-deposit bonus is free of charge and is offered upon registration on the broker's platform. Sometimes a promo code is needed to claim it. Traders can use this bonus to trade without risking their own money. After a certain period, or after reaching a certain turnover on the bonus amount, traders can withdraw the no-deposit bonus. We go into the usual conditions in detail later in this article.


How to get a binary options no-deposit bonus

To get the most out of a binary options no-deposit bonus (welcome bonus), a trader should set aside any thought of withdrawing the bonus and focus on using it to trade. The no-deposit bonus is best traded at the minimum risk amount per trade. For example, a broker with a minimum investment amount between $5 and $10 is well suited to trading with a binary options no-deposit bonus.
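To make the point about minimum stakes concrete, here is a minimal sketch of how many fixed-size trades a bonus can fund in the worst case where every trade loses. The function name `trades_covered` and the choice to work in whole cents are assumptions made for this illustration, not anything a broker publishes.

```python
def trades_covered(bonus_cents: int, stake_cents: int) -> int:
    """Number of fixed-size trades the bonus can fund if every trade loses.

    Amounts are in whole cents to avoid floating-point rounding.
    """
    if stake_cents <= 0:
        raise ValueError("stake must be positive")
    return bonus_cents // stake_cents


BONUS_CENTS = 100 * 100  # the $100 no-deposit bonus discussed in this article

# Compare the $5 and $10 minimum stakes mentioned above.
for stake_dollars in (5, 10):
    n = trades_covered(BONUS_CENTS, stake_dollars * 100)
    print(f"${stake_dollars} stake: up to {n} losing trades before the bonus is gone")
```

The smaller the stake, the more trades the same bonus can absorb, which is why a low minimum trade amount matters when trading on bonus money alone.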

The idea was to give traders a little money to test the waters of the live binary options market, give them the chance to build it up, and let them withdraw profits only once they had generated a specific trading volume, enough to cover the bonus and earn the broker good money. In essence, binary options no-deposit bonuses have become a way of drawing in would-be traders who are reluctant to start trading or whose accounts have gone dormant.

You need to complete the following steps to get a no-deposit bonus:

- Sign up with a binary options broker that offers this type of bonus

- Fill in your personal details

- Confirm and verify your trading account

- Use the free no-deposit bonus to trade

- Withdraw the bonus after meeting the bonus conditions


Pros and cons of the binary options no-deposit bonus

By offering a no-deposit bonus, the binary options broker gives the trader an incentive to trade and to deposit more money into the trading account. Remember that nothing in the world is free. Below, we list the advantages and disadvantages of the no-deposit bonus:

Advantages:

- Trade with real money at no cost

- Learn how to handle real money in trading

- A chance of free money

- You can learn by trial and error using multiple accounts

Disadvantages:

- You must meet the bonus conditions to withdraw the money

- You may stop valuing real money when trading

Conditions of the no-deposit bonus

There are always terms and conditions attached to a free no-deposit bonus in binary options trading. In our experience, you cannot withdraw the bonus directly after registration. The binary options broker will not give you free money just for signing up.

Either you must reach a certain turnover on the bonus, or the broker requires a further deposit. The conditions of our recommended brokers are as follows:

- Activate the free no-deposit bonus using the promo code "BOFREE"

- To withdraw the no-deposit bonus, you must make the broker's minimum deposit and reach a certain turnover

- Turnover is calculated as 3 x (deposit + bonus)

For example, you make a minimum deposit of $250 and use a no-deposit bonus of $100. This means you must reach a total turnover of $1,050 (3 x $350).
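The turnover rule above can be sketched in a few lines. This is only an illustration of the stated formula, 3 x (deposit + bonus); the function names and the `traded_volume` parameter are hypothetical, and an individual broker's terms may count turnover differently.

```python
def required_turnover(deposit: float, bonus: float, multiplier: float = 3.0) -> float:
    """Trading volume required before the bonus can be withdrawn.

    Implements the rule stated in the article: multiplier x (deposit + bonus).
    """
    if deposit < 0 or bonus < 0:
        raise ValueError("deposit and bonus must be non-negative")
    return multiplier * (deposit + bonus)


def remaining_turnover(deposit: float, bonus: float, traded_volume: float,
                       multiplier: float = 3.0) -> float:
    """Volume still to be traded; never negative (hypothetical helper)."""
    return max(0.0, required_turnover(deposit, bonus, multiplier) - traded_volume)


# The article's example: $250 minimum deposit plus a $100 no-deposit bonus.
print(required_turnover(250, 100))        # 1050.0
print(remaining_turnover(250, 100, 400))  # 650.0
```

With a $20 minimum deposit instead of $250, the same rule gives 3 x $120 = $360, so the minimum deposit has a large effect on how much you need to trade.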

Can you withdraw the bonus?

Yes, you can withdraw the bonus, but only after meeting the bonus conditions. For more information, you can ask the broker's support team about all the required steps.

The main differences between no-deposit bonuses and deposit bonuses

Popular as no-deposit bonuses are, brokers offer other bonuses as well. Below, we explain how a no-deposit bonus differs from a typical deposit bonus.

Deposit requirement

One of the key differences is that a deposit bonus explicitly requires a deposit. That is not the case with a no-deposit bonus.

Bonus size

The usual size of a no-deposit bonus is between $5 and $50. Some are larger, but these should be viewed with some caution.

Deposit bonuses, on the other hand, can regularly reach as much as $500 per trader.

Fixed amount versus variable percentage

As noted, a no-deposit bonus is a fixed sum. This follows from the fact that it does not depend on a deposit. Deposit bonuses in binary trading, by contrast, can range from 10% to 100% of the deposit, depending on your broker and its conditions.
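The difference between a fixed sum and a percentage can be shown with a short sketch. The deposit amounts and the $50 fixed bonus below are illustrative values picked from the ranges mentioned above, and the function names are hypothetical.

```python
def no_deposit_bonus(fixed_amount: float) -> float:
    """A no-deposit bonus is a fixed sum, independent of any deposit."""
    return fixed_amount


def deposit_bonus(deposit: float, percent: float) -> float:
    """A deposit bonus scales with the deposit: deposit x percent / 100."""
    if deposit < 0 or percent < 0:
        raise ValueError("deposit and percent must be non-negative")
    return deposit * percent / 100


# Illustrative deposits; 10% and 100% are the ends of the range quoted above.
for deposit in (100, 500, 1000):
    print(
        f"deposit ${deposit}: "
        f"fixed ${no_deposit_bonus(50):.2f}, "
        f"10% -> ${deposit_bonus(deposit, 10):.2f}, "
        f"100% -> ${deposit_bonus(deposit, 100):.2f}"
    )
```

The fixed bonus stays the same in every row, while the percentage bonus grows with the deposit.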

Trading and withdrawal conditions

Trading requirements and withdrawal conditions are generally very strict for both types of bonus. They may include explicit limits on when a bonus can be withdrawn. Even on the best no-deposit bonuses, the conditions are often considerably harder to meet.

You will often need to reach a higher turnover for no-deposit bonuses than for regular deposit bonuses.

How is a welcome bonus beneficial?

When you start trading, in this or in any other market, you will probably make missteps and some poor decisions.

However, as you get used to the trading site and grow more confident in what you are doing, you will make more deliberate decisions and better choices.

It is unrealistic to expect everyone to succeed without fail. Making mistakes is fine, though, particularly if you have a bonus to fall back on. A bonus works as a cushion: it gives you room to take the time to explore and learn.

Making mistakes with a welcome bonus means that most beginner errors should be behind you by the time you trade with real money. Successes grow out of mistakes, so it is no bad thing to make them early and learn from them.

You can use your own investment funds for what you already know and use the bonus money to explore another market. This is a smart choice. The only real restriction is tournaments: in general, you cannot use bonuses to pay for your entry tickets.

By deliberately using your free money to gain new experience, you open up a whole new range of potential income streams.

What is a binary options broker bonus?

A binary options broker bonus is an amount that a broker virtually credits to your trading account. A broker bonus is usually a certain percentage of the deposit you make into your trading account.

| Good to know! |

| A binary options broker bonus is a way for a broker to show its clients that it values them. By giving a trader a bonus, a broker increases the funds the trader has available for trading binary options. |

Traders can therefore potentially multiply their earnings by using a binary options deposit bonus. Every binary options broker has different terms and conditions for deposit bonuses. Usually, the bonus percentage grows as traders make more deposits into their trading accounts.

For example, traders who sign up for a Gold trading account at BinaryCent can get up to a 100% deposit bonus. This means that if you fund your Gold trading account with $1,000, you will have $2,000 in your account.

(Risk warning: your capital may be at risk)

Types of binary options broker bonuses and deposit bonuses

Here are some of the main types of bonus offered by binary options brokers.

The deposit bonus explained

When you fund your trading account with a given broker, it rewards you for doing so. The reward comes in monetary form, as a deposit bonus.

Brokers have different terms and conditions for using their deposit bonus. For example, a trader can use the bonus amount credited to a real trading account to trade binary options.

However, brokers set certain terms and conditions for withdrawing the deposit bonus. Usually, a trader must reach a given trading volume to withdraw the bonus.

Deposit bonuses also vary with the type of account you hold, and their percentage may differ with the amount deposited. For example, BinaryCent offers a higher deposit bonus percentage as you move up to a higher account type.

Rules and conditions of a deposit bonus

A trader must comply with several terms and conditions to activate a deposit bonus. Here are some of them:

- A trader must register a real trading account with the broker offering the deposit bonus.

- A deposit bonus is available only on the amount a trader deposits into the account. To claim a deposit bonus, you must therefore fund your account.

- Traders can withdraw their deposit bonus only once they reach a certain trading volume.

- Usually, the bonus stays locked in your account until you reach the trading volume threshold.

- A trader can use the deposit bonus to place trades.

- Once the trader makes a profit and the conditions are met, both the bonus and the profit can be withdrawn.

No-deposit bonus

Some binary options brokers reward new clients with a free bonus that requires no deposit. This is called a "no-deposit bonus". In most cases, these offers are rare and limited. You can visit our no-deposit bonus comparison to find the best offers.

VIP bonus

Most brokers let clients open a VIP account once they reach the required trading volume. These clients then generally become eligible for VIP bonuses, which are higher than other types of bonus.

Here are some other brokers with bonus offers:

| Broker | Bonus | Advantages | Account |
| --- | --- | --- | --- |
|  | 70%+ deposit bonus, cashback bonus, turnover bonus, risk-free trades | Highest return; fastest execution; signals; trading 24/7; free demo; $10 min. deposit | (Risk warning: your capital may be at risk) |
|  | 50%+ deposit bonus, risk-free trades | Bonuses; trading 24/7; social trading; free demo; $50 min. deposit | (Risk warning: your capital may be at risk) |
|  | 50% deposit bonus, $100 no-deposit bonus with the code "BOFREE" | Easy to use; download; support 24/7; high return; free demo; $250 min. deposit | (Risk warning: your capital may be at risk) |

Conclusion: there are several offers for no-deposit bonuses

In conclusion, there are several offers for getting a free binary options no-deposit bonus. In this article, we presented the 4 best brokers that offer one. In our view, IQcent is by far the best choice for getting your free bonus.

It has a very low minimum deposit, and you can trade with minimum amounts of a few cents. The trading platform is also very intuitive, and you get personal support 24/7.

(Use the promo code "BOFREE")

Frequently asked questions about binary options no-deposit bonuses:

Which binary options broker is best for a no-deposit bonus?

According to our research, IQcent is the best broker for a no-deposit bonus in binary options trading. The broker offers a free $100 no-deposit bonus. On the platform, the return is 90%+ per trade. Traders can access more than 100 markets and can also trade CFDs. Personal support is offered as well, which in our view makes it the best offer for traders.

Can you withdraw a bonus without verification?

No, you cannot withdraw a bonus without verification. Paying out money without verifying a trader's account would go against anti-money-laundering standards. Binary options brokers require traders to upload a photo of their ID and sometimes to verify their payment method.

Is a no-deposit bonus free?

Yes, a no-deposit bonus is free after registration, but the broker attaches conditions to the bonus amount. Once you have met those conditions, you can withdraw the money.

Do I need a special code to secure my welcome bonus?

Yes, in our comparison every broker requires a special promo code to give you the welcome bonus. If an external site gives you a voucher code for a specific broker, you must use it to have a chance of securing your bonus.

Can I sign up for a binary options broker bonus?

Any trader can sign up for a binary options broker bonus after registering on an online trading platform. The trader must meet the terms and conditions for receiving the broker bonus. Usually, a trader must meet the minimum deposit requirement when claiming the broker bonus.

Which binary options broker bonus is the best?

The binary options broker bonuses offered by Quotex, Pocket Option, IQCent, VideForex and BinaryCent are the best.

The promo code is not valid for all brokers.

We have fixed it, it should work now! Regards

Hi, I activated the $100 no-deposit bonus on a real account, but it shows an equity of $0 below the $100.