People began trading binary options a few years ago, and today it has become a popular way to try to make money. The appeal is that the trade rests on a simple yes-or-no proposition.

Binary options are easy to execute and easy to understand. Any trader, new or professional, can make significant money by speculating on an asset's price movement.

Besides its simplicity, another thing traders like about binary options is the short-term trading strategy. With the 60-second strategy, traders can exit the market with a profit in a minute or less.

But what is the 60-second binary options strategy? How does it work? What are its benefits and limitations? This guide answers these questions and more.

(Risk warning: Your capital may be at risk)

What is 60-second trading?

Sixty-second binary options trading is a way of investing in the market that delivers fast results. It suits traders who want to make money quickly without staying in the market for long. But this kind of trading is not easy.

Because traders must exit the market within a minute, they need to analyze the market and read the asset's price movement correctly. Even a small mistake can cost them a lot of money.

The sixty-second trading strategy is also known as a short-term trading strategy. New traders should stay away from this technique because the risk of losing money is higher.

If you want to try your luck with the 60-second strategy, choose a reliable broker. Everything happens very quickly in this kind of trading, and if the broker's platform is slow, you may not be able to trade in real time and will lose as a result.

So once you are familiar with this trading method and have found a good broker, you can try one-minute binary options trading.

How does the 60-second trading strategy work?

Sixty-second trading works the same way as any other binary options trade. The trader has to predict whether the price of a given asset will rise or fall, and then act quickly on that prediction.

To win a 60-second binary options trade you need a clear strategy, and you need to decide quickly. Although this strategy demands fast moves, it is better not to rush into every trade, because doing so leads to significant losses.

Before entering a trade, it is essential to analyze the market to identify the trend, follow the price action, and gauge your chances of winning.

For example, if the price has made two downward moves in the last two minutes, you can place a put trade, which improves your chances of winning. Similarly, if there have been two or more upward moves, you can choose a call trade. If your trade expires "in the money", you earn a return of roughly 70% to 90% of the amount you staked. If the trade fails, however, you lose the amount you staked.
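
The two-move rule and the payoff described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `pick_direction` and `settle` are hypothetical, prices are one closing price per minute, and the payout rate is whatever your broker quotes.

```python
def pick_direction(closes):
    """Return 'PUT' after two consecutive down moves, 'CALL' after two
    consecutive up moves, and None when the last two moves disagree.

    `closes` is a list of one-minute closing prices, oldest first
    (an assumption made for this sketch).
    """
    if len(closes) < 3:
        return None  # not enough data for two moves
    first_move = closes[-2] - closes[-3]
    second_move = closes[-1] - closes[-2]
    if first_move < 0 and second_move < 0:
        return "PUT"
    if first_move > 0 and second_move > 0:
        return "CALL"
    return None


def settle(stake, payout_rate, won):
    """Profit or loss of one trade: the payout on a win, minus the stake on a loss."""
    return stake * payout_rate if won else -stake


print(pick_direction([1.3000, 1.2995, 1.2990]))  # two down moves
print(settle(100, 0.80, won=True), settle(100, 0.80, won=False))
```

Note that a loss costs the full stake while a win pays only 70% to 90% of it, so wins and losses are not symmetric.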

The best 60-second trading strategy

The world of one-minute binary options moves very fast, and you need a solid, detailed plan to stay on the winning side.

There is no single best one-minute binary options strategy, but support and resistance levels give you a concrete framework to trade from. The underlying idea is that price tends to turn back when it reaches these levels rather than move through them.

Support and resistance levels frame the range within which an asset's price moves. Each time frame has its own levels, and on shorter time frames price is more likely to break beyond them.

Traders can also use a candlestick chart for 60-second binary options trading. A candlestick chart makes an asset's price trend easier to see.

Once you have found a trading strategy, stick to it. Whatever your own view of a trade, do not ignore what the numbers and indicators are telling you. This will help you avoid costly mistakes.

60-second binary options strategies:

Having trading strategies in place keeps emotion out of your decisions.

A strategy means having a specific, calculated plan of action. Greed and the fear of losing everything are common emotions when you invest hard-earned money without a strategy. Having one instead builds confidence and the ability to take calculated risks.

Using a strategy matters even more when trading binary options. Although the software is easy to use, you can still lose a lot of money if you make poor decisions or pick the wrong trades.

1. Support and resistance strategy

Asset prices tend to move in a series of waves, each with a peak and a trough. These limits are treated as major reversal levels, which are easy to identify from the basic support and resistance levels. A favorite 60-second strategy is to identify moments when price clearly bounces off these resistance and support levels. New binary options should then be opened in the direction opposite to the one price was moving in before the bounce.

For example, the following GBP/USD 60-second chart offers good examples of when to execute both CALL and PUT binary options. Essentially, when price bounces down off resistance, you should open a PUT option. Likewise, if price rises after hitting support, you should open a CALL binary option.

To set up this binary options strategy, your first step is to find a currency pair that has been range trading for an extended period. Then determine the resistance and support levels, either from your broker's information or by connecting the highest points for resistance and the lowest points for support, as shown in the chart above.

Once you see price test one of these levels, wait for the current candlestick to confirm a real bounce by closing cleanly below resistance or above support. This gives you some protection against false signals. For example, if price is capped by resistance as in the chart above and the bounce is confirmed, open a new PUT binary option with GBP/USD as the underlying asset and a 1-minute expiry.
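
The confirmation step can be written as a simple check on one completed candlestick. The sketch below is a hedged illustration, not a trading system: the `Candle` type, the `bounce_signal` name, and the sample price levels are all made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Candle:
    """One completed 1-minute candlestick (hypothetical helper type)."""
    open: float
    high: float
    low: float
    close: float


def bounce_signal(candle, support, resistance):
    """Return 'PUT' when the candle tested resistance but closed below it,
    'CALL' when it tested support but closed above it, otherwise None."""
    if candle.high >= resistance and candle.close < resistance:
        return "PUT"
    if candle.low <= support and candle.close > support:
        return "CALL"
    return None


# Illustrative levels only: support at 1.2900, resistance at 1.3000.
tested_resistance = Candle(open=1.2990, high=1.3002, low=1.2985, close=1.2988)
print(bounce_signal(tested_resistance, support=1.2900, resistance=1.3000))
```

The check only fires on a finished candle, which mirrors the advice to wait for the close before acting.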

Staking $100 at a 75% payout, you would have collected $75 on each of the two PUT options shown above. In fact, your initial $100 stake would have grown to about $937 over the four trades shown above, within 5 hours, had you reinvested your returns each time.
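
The $937 figure follows from compounding: each winning trade multiplies the balance by 1.75 when the whole balance is reinvested at a 75% payout. A minimal sketch (the function name `compound` is just for illustration) that reproduces the arithmetic:

```python
def compound(stake, payout_rate, wins):
    """Balance after `wins` consecutive winning trades when the whole
    balance is reinvested each time."""
    balance = stake
    for _ in range(wins):
        balance += balance * payout_rate  # winnings are added to the balance
    return balance


print(compound(100, 0.75, 4))  # 100 * 1.75**4
```

The calculation assumes every one of the four trades wins; a single loss with the full balance at stake would wipe the balance out.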

2. Trend-following strategy

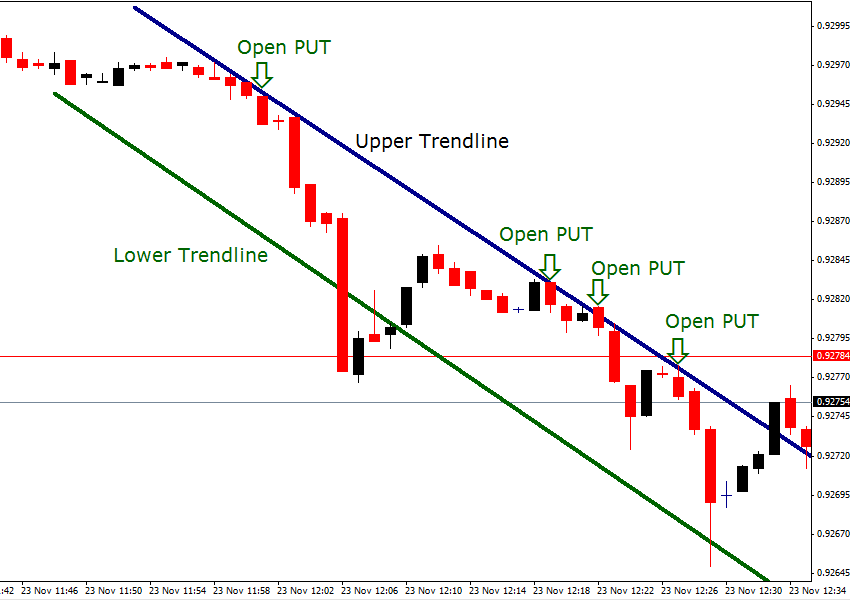

Another 60-second strategy that has gained popularity recently is based on following trends. Such strategies let you take advantage of trading with the trend, in line with the well-known maxim 'the trend is your friend'. The basic idea is to follow the trend and execute a 'CALL' binary option when price bounces higher off the lower trendline while the underlying security is climbing within a well-established bullish channel. Conversely, you should open PUT binary options when price bounces lower after reaching the upper trendline of a well-defined bearish channel.

For example, the 1-minute chart above for the USD/CHF currency pair shows a strong downtrend. As you can confirm by studying the chart, four opportunities to open PUT options arose after price fell back from the upper trendline.

To start a trend strategy, first find an asset that has been trading in an uptrend or downtrend for some time. Then draw the trendlines: in the case of a bearish channel, as in the chart above, connect the series of lower highs for the upper trendline and the lower lows for the lower trendline.

When you see price test the upper trendline, wait until the current candlestick has fully formed so you can verify that it closes below that level. If it does, open a new PUT option with USD/CHF as the underlying asset and a 1-minute expiry. Suppose your stake is $5,000 and the payout rate is 75%. Had you reinvested your winnings each time, the four successful trades identified on the chart would have grown that stake to roughly $46,890 in a little over 2 hours, a net gain of about $41,890. Now you can begin to see why so many traders are so enthusiastic about 60-second binary options.
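
Drawing a trendline through two swing highs and checking the test-then-close-below condition can be sketched as follows. The names `trendline` and `downtrend_put_signal`, the candle index used as the x-axis, and the sample prices are assumptions made for this illustration.

```python
def trendline(point_a, point_b):
    """Return a function giving the trendline level at any candle index,
    for the straight line through two (index, price) points."""
    (x1, y1), (x2, y2) = point_a, point_b
    slope = (y2 - y1) / (x2 - x1)
    return lambda x: y1 + slope * (x - x1)


def downtrend_put_signal(upper_line, index, high, close):
    """True when the candle at `index` touched the upper trendline of a
    bearish channel but closed back below it."""
    level = upper_line(index)
    return high >= level and close < level


# Two lower highs (illustrative values) define the upper trendline.
upper = trendline((0, 1.0000), (10, 0.9000))
print(upper(20))
print(downtrend_put_signal(upper, index=20, high=0.8010, close=0.7950))
```

A real chart would need more than two points to trust the channel; two is simply the minimum that defines a line.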

3. Breakout strategy

Trading breakouts is my favorite 60-second strategy because breakouts are easy to spot and can produce impressive returns. The main idea is that when an asset's price has oscillated in a limited range for an extended period and then gains enough momentum to break out, it often keeps moving in the chosen direction for a considerable time.

Your first step in applying this technique is to identify an asset that has been fluctuating in a limited range for an extended period. You are looking for a sideways trading pattern with a clearly defined floor and ceiling, as shown in the AUD/USD 60-second chart above. Price will often hit the floor and the ceiling many times before finally breaking free, as the chart shows. A sustained breakout should then be treated as a strong signal to open a new trade.

As the chart above shows, price makes a clear break below its support, or floor. You are now advised to wait until the current 60-second candlestick has fully formed so you can confirm that its closing value is unmistakably below the lower level of the previous trading range. This confirmation gives you some protection against a false signal.

Once you have that confirmation, open a new 'PUT' binary option on AUD/USD with a 60-second expiry. Because this form of trading is so fast-moving, do not risk more than 2% of your equity per position. If your equity is $10,000, your stake should be only $200. Your opening price is 1.0385, your payout rate is 80%, and your refund is 5%. When the one-minute expiry is reached, AUD/USD stands at 1.0375; you are "in the money" and collect $160.
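
The numbers in this example can be checked with a short calculation. The sketch below makes two assumptions that the text does not spell out: the 5% refund is read as the share of the stake returned on a losing trade, and a close exactly at the opening price is treated as a loss (brokers differ on ties). The function names are illustrative.

```python
def position_size(equity, risk_fraction=0.02):
    """Stake for one position as a fixed fraction of equity (2% by default)."""
    return equity * risk_fraction


def put_outcome(stake, open_price, close_price, payout_rate, refund_rate):
    """Profit or loss of a PUT option at expiry.

    Assumption: on a loss, `refund_rate` of the stake is returned.
    Assumption: a tie (close == open) counts as a loss here.
    """
    if close_price < open_price:
        return stake * payout_rate  # in the money
    return -stake * (1 - refund_rate)  # out of the money, minus the refund


stake = position_size(10_000)
print(stake)
print(put_outcome(stake, 1.0385, 1.0375, payout_rate=0.80, refund_rate=0.05))
```

Under the same assumptions, a losing trade with this stake would cost $190 rather than the full $200.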

60-second binary options with indicators:

Here are a few popular binary options indicators you can use for 60-second trading.

1. Relative Strength Index

Of all the indicators available, the RSI (Relative Strength Index) is regarded as the most reliable for 60-second binary options trading. With it, a trader can find a winning trade within a minute.

This technical analysis tool signals possible trend changes in the market. The RSI has two distinct zones, overbought and oversold, on which the whole analysis is based.

When the RSI curve rises out of the oversold zone from below, you can trade for a rise, that is, a call. Similarly, when the curve drops out of the overbought zone, you can trade for a fall, that is, a put.

To improve your chances of winning, you can use the following settings: chart period 10 seconds, RSI period 18, overbought level 70, oversold level 30, and option expiry 1 minute.
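
For readers who want to see how these settings translate into a signal, here is a minimal pure-Python sketch. It assumes the common Wilder-smoothed form of the RSI; your platform's built-in indicator may differ in detail, and the function names are illustrative.

```python
def rsi(closes, period=18):
    """Relative Strength Index of the latest close, using Wilder's smoothing.

    `closes` is a list of closing prices, oldest first, with more than
    `period` entries.
    """
    if len(closes) <= period:
        raise ValueError("need more than `period` closing prices")
    deltas = [b - a for a, b in zip(closes, closes[1:])]
    gains = [max(d, 0.0) for d in deltas]
    losses = [max(-d, 0.0) for d in deltas]
    # Seed with simple averages, then smooth the remaining values.
    avg_gain = sum(gains[:period]) / period
    avg_loss = sum(losses[:period]) / period
    for gain, loss in zip(gains[period:], losses[period:]):
        avg_gain = (avg_gain * (period - 1) + gain) / period
        avg_loss = (avg_loss * (period - 1) + loss) / period
    if avg_loss == 0:
        return 100.0  # no losses in the window
    rs = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + rs)


def rsi_signal(prev_rsi, curr_rsi, oversold=30.0, overbought=70.0):
    """'CALL' when RSI rises out of the oversold zone, 'PUT' when it
    falls out of the overbought zone, otherwise None."""
    if prev_rsi < oversold <= curr_rsi:
        return "CALL"
    if prev_rsi > overbought >= curr_rsi:
        return "PUT"
    return None


print(rsi_signal(28.0, 31.0), rsi_signal(72.0, 69.0))
```

With 10-second candles and a period of 18, the indicator looks back over about three minutes of price data.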

2. Ichimoku

Besides the RSI, another popular indicator for 60-second binary options trading is Ichimoku. This indicator is read in terms of clouds. According to this strategy, if the indicator curve exits the cloud twice from bottom to top, traders can buy a put option.

In the opposite case, traders can buy a call option. If price keeps moving in the same direction, traders make a significant profit.

3. Bollinger Bands indicator

The next indicator traders can use for 60-second binary options trading is the Bollinger Bands indicator. It helps traders spot a breakout as an asset's price moves.
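
The article gives no settings for Bollinger Bands, so the sketch below assumes the conventional defaults: a 20-period simple moving average with bands two standard deviations either side. Both values, and the function name, are assumptions to adjust for your own chart.

```python
from statistics import mean, pstdev


def bollinger_bands(closes, period=20, num_std=2.0):
    """Return (lower, middle, upper) bands for the latest `period` closes.

    The middle band is a simple moving average; the outer bands sit
    `num_std` population standard deviations away from it.
    """
    if len(closes) < period:
        raise ValueError("need at least `period` closing prices")
    window = closes[-period:]
    middle = mean(window)
    spread = num_std * pstdev(window)
    return middle - spread, middle, middle + spread


# Nineteen flat closes followed by a jump (illustrative data).
closes = [1.0] * 19 + [2.0]
lower, middle, upper = bollinger_bands(closes)
print(closes[-1] > upper)  # the last close sits outside the upper band
```

A close outside a band is one way to flag a possible breakout; it is not a confirmation on its own.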

60-second binary options without indicators:

While some traders prefer indicators for 60-second binary options trading, some strategies do not need them. If you want to trade without any indicators, you must analyze the market accurately from the price charts.

An important aspect of 60-second binary options trading

Before you start trading, you need to choose a broker and register with it. It is not wise to trade with real money right after signing up. You can use virtual money to get to know 60-second trading.

Trading on a demo account helps you learn how reliable the platform and its interface are. It is also not advisable to put more than 2% of your account balance into a single 60-second binary options trade.

Brokers that offer 60-second binary options:

To profit from 60-second binary options trading, you need to trade with a reliable broker. Here are some reliable binary options brokers to choose from.

100+ markets

- Min. deposit $10

- $10,000 demo

- Professional platform

- High profit of up to 95%

- Fast withdrawals

- Signals

from $10

(Risk warning: Trading is risky)

100+ markets

- Accepts international clients

- High payouts of 95%+

- Professional platform

- Fast deposits

- Social trading

- Free bonuses

from $50

(Risk warning: Trading is risky)

100 markets

- Accepts international clients

- 24/7 support

- Binary options and CFDs

- High returns

- Free bonus

- TradingView charts

from $250

(Your capital may be at risk)

1. Quotex

Quotex is a well-known binary options broker with excellent trading services. Traders can sign up with Quotex without any hassle. It is a good broker for binary options trading because it gives traders access to many underlying assets.

| Good to know! |

| Quotex offers broad access to the underlying markets. This 60-second binary options broker lets traders make the most of their trading. The platform also provides several trading tools that traders can use for technical analysis. |

Trading 60-second binary options on Quotex is enjoyable for the following reasons:

- Placing 60-second binary options trades on this platform is easy for any trader.

- A trader can choose a payment method to fund a Quotex trading account and start trading.

- Traders can also use trading indicators to carry out technical analysis before trading.

- This 60-second binary options broker lets traders use the Quotex demo account. A trader can use it to learn how to trade 60-second binary options, and also how to manage funds and practice risk management.

- In addition, Quotex gives traders access to plenty of research and educational material, which helps them trade 60-second binary options profitably.

So any trader can enjoy making money within minutes with the help of this 60-second binary options broker. Traders can also trade on the Quotex app. From every angle, Quotex is the best broker a trader could wish for.

2. Pocket Option

Another great 60-second binary options broker is Pocket Option. Pocket Option makes every kind of trading easy and enjoyable for traders. Whether it is a 60-second binary options trade or a 5-minute trade, a trader can place it effortlessly.

| Good to know! |

| Pocket Option offers reliable services. If you are just starting out in binary options trading, you can begin with Pocket Option. Even if you want to trade 60-second binary options, you can sign up for a trading account with a minimum deposit of just $50. |

Some of the features that make Pocket Option a great 60-second binary options broker are as follows:

- Traders can find many underlying assets on Pocket Option, just as on Quotex.

- This 60-second binary options broker gives traders several trading platforms. You can make 60-second binary options trading more enjoyable by choosing a platform that matches your level of expertise.

- Traders have many trading indicators at their disposal, such as Bollinger Bands and MACD. They can use these indicators and other tools to make trading easier.

- Traders can also find a number of educational videos and similar resources on Pocket Option.

- The platform also offers the Pocket Option demo account, which traders can use for 30 days.

3. BinaryCent

This 60-second binary options broker is great for traders who want to open and close their trades within a minute. BinaryCent gives traders some of the fastest ways to enjoy 60-second options trading. With this 60-second binary options broker, you can make money easily and quickly.

| Good to know! |

| BinaryCent offers traders a reliable and intuitive online trading platform. You can start trading with a small minimum deposit. The platform also offers traders plenty of stocks, commodities, and other assets. So if you want to diversify your trades, BinaryCent is a great trading platform. |

Here are some features that make BinaryCent a great 60-second binary options broker:

- BinaryCent is a feature-rich binary options trading platform. It has all the features a trader would like to have on a platform.

- All the leading technical tools and indicators are available with BinaryCent, so traders have no difficulty trading binary options with this 60-second broker.

- Trading binary options with BinaryCent appeals to traders because they can use all of these first-class trading tools.

- BinaryCent offers traders a demo account, where you can practice opening and closing 60-second binary options trades.

- Like other trading platforms, BinaryCent also gives traders access to leading educational resources.

So binary options trading is enjoyable for traders who know the right tactics, and things get even better when a trader holds an account with a well-known broker.

If a trader is keen to make money trading 60-second binary options, they can choose one of these three binary options brokers.

Who should use the 60-second binary options strategy?

To avoid losing money on 60-second binary options, make sure you are one of the following types of trader.

- Short-term traders: If you trade short term and do not want to stay in the market for long, the 60-second trading strategy is for you.

- High-volatility asset traders: If you like trading highly volatile assets such as cryptocurrencies, the 60-second trading strategy can help. But if you are not familiar with the asset, think twice before acting.

- Small-movement traders: If your trades depend on small market movements, you can use 60-second binary options trading, because small ups and downs in the market can make you money.

- High-frequency traders: If you trade several times a day, you can use the 60-second binary options strategy to exit the market quickly.

Who should avoid the 60-second trading strategy? Advantages and disadvantages

Although the 60-second trading strategy has become popular, it is not for every trader. If you are one of the following, you should not use 60-second binary options trading.

- New traders: If you are new to binary options trading, you should avoid the 60-second strategy. You probably lack a deep understanding of the market, and without proper knowledge you will lose.

- Long-term traders: If you are a long-term trader, 60-second trading is not for you. With this strategy you can only make small profits.

- Traders interested in higher payouts: If you want higher payouts, try longer-term trades instead of 60-second binary options. The payout rate on short-term trades is 10% to 20% lower than on long-term trades.

- Traders who depend on trading signals: If you follow trading signals, you should avoid 60-second binary options trading.

Advantages of the 60-second trading strategy

- You can trade many times in one day.

- You get a variety of assets to trade.

- The sixty-second binary options strategy offers a better trading experience.

- You can make the most of small market movements.

Disadvantages of the 60-second trading strategy

- A major disadvantage of the 60-second strategy is that it is risky.

- It is hard to predict an asset's price movement correctly.

- Overtrading can cause you to lose large amounts of money.

Use ratio-based money management

A ratio-based method determines what percentage of your money you should invest, based on how much money you have. Adopting ratio-based money management is a smart decision and a good place to start.

This method is somewhat less risky because it sizes each trade according to how much money is in your account.

When applying this strategy, first decide what share of your funds you are prepared to put at risk. Traders generally settle on 1% or 2%; experienced professional traders, however, sometimes choose to risk 5% of their capital.

If you lose money, your next stake shrinks with your balance, so you will always have funds left for the next trade.

It also means that after each successful trade your stake grows with your balance. This percentage-based method helps keep your results consistent.
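
To see how the stake follows the balance, here is a small simulation. The starting balance, the 2% risk share, the 80% payout, and the sequence of wins and losses are illustrative assumptions, and `simulate` is a hypothetical helper name.

```python
def simulate(balance, risk_fraction, payout_rate, outcomes):
    """Apply ratio-based staking over a sequence of trade outcomes.

    `outcomes` is an iterable of booleans (True = win). Returns a list of
    (stake, balance_after_trade) pairs, rounded to cents.
    """
    history = []
    for won in outcomes:
        stake = balance * risk_fraction  # stake is resized before every trade
        balance += stake * payout_rate if won else -stake
        history.append((round(stake, 2), round(balance, 2)))
    return history


for stake, balance in simulate(1_000, 0.02, 0.80, [False, False, True]):
    print(stake, balance)
```

After two losses the stake has shrunk from $20.00 to about $19.21, which is the self-limiting behaviour described above.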

Trading advice for 60-second binary options

- Take on only the risk you can afford; generally speaking, you should not risk more than 1% of your capital on a single trade.

- Choose the right broker; not all brokers offer binary options trading. Take advantage of demo accounts where available and do thorough research.

- Use the free tools at your disposal, such as signals. Signals act as market catalysts and can be excellent predictors of price changes.

- Avoid overtrading; it is very easy to lose control. You may ask why you should not aim for $5,000 an hour if you can make $3,000. Wrong. Building a good setup takes time.

- Practice patience, trust your plan, and keep your composure under pressure.

The rewards of one-minute binary options

The ability to win quickly is the main benefit of the short trading window. In essence, you can profit from every price change. However, the shorter window also carries higher risk, which is why the rewards are higher than with, say, a one-day window.

In addition, you can run many trades at the same time. Finally, 60-second binary options offer a simple win-or-lose proposition, which makes them very easy instruments to understand.

The risks of one-minute binary options

Still, the dangers should not be ignored. Compared with investing in stocks, currencies, or commodities, binary options trading can be riskier. The shorter window also creates a high-pressure atmosphere, so a series of important decisions must be made quickly.

The 60-second window is probably not the best place to start for people who are new to trading, to binary options contracts, or to both. A longer window gives you more opportunity to spot your mistake and, most importantly, to correct it. It is much harder to recover if you trade the shorter window and make a series of mistakes. That is why it is important to learn how to trade in this particular window. Think twice before trying to apply your standard approach in this specific context.

Traders also need to be aware that not all brokers offer binary options trading. So whether you are based in the US, the UK, or somewhere else entirely, be careful: confirm that binary options are legal where you want to trade.

The importance of execution speed for 1-minute trading

Execution speed refers to the short time between opening an order with the broker and getting a response from the other end.

Execution speed is expressed in milliseconds. This value varies from platform to platform, depending on the quality of the trading platform and the number of liquidity servers the broker uses.

It helps to check execution speed by 'pinging' your brokers, such as Quotex, Pocket Option, and BinaryCent.
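
One generic way to measure round-trip time in milliseconds is to time a request from start to response. The sketch below does not call any real broker API: `send_order` is a hypothetical callable you would supply yourself, and here it is replaced by a short sleep that stands in for the network round trip.

```python
import time


def measure_latency_ms(send_order, attempts=5):
    """Time `send_order()` several times and return (min, average, max)
    in milliseconds. `send_order` is any zero-argument callable that
    blocks until the response arrives (hypothetical, user-supplied)."""
    samples = []
    for _ in range(attempts):
        start = time.perf_counter()
        send_order()
        samples.append((time.perf_counter() - start) * 1000.0)
    return min(samples), sum(samples) / len(samples), max(samples)


def fake_order():
    """Stand-in for a real request: pretends the round trip takes ~10 ms."""
    time.sleep(0.01)


fastest, average, slowest = measure_latency_ms(fake_order, attempts=3)
print(f"min {fastest:.1f} ms, avg {average:.1f} ms, max {slowest:.1f} ms")
```

Taking several samples matters because a single measurement can be distorted by one slow network hop.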

Conclusion: 60-second trading is a great strategy

The sixty-second binary options strategy is an excellent way to make large profits in under a minute. But to improve your chances of winning with this strategy, it is essential to analyze the market correctly.

You can develop a detailed strategy to steer clear of losses. You should also avoid overtrading.

FAQ: Frequently asked questions about 60-second trading

What is the fastest way to make a profit in binary options trading?

The fastest way to make a profit trading binary options is to place 60-second trades. A trader who places 60-second trades can grow their account balance quickly because they can trade every minute.

How can a trader place 60-second binary options trades?

A trader can place 60-second binary options trades by choosing a broker, selecting an underlying asset, and placing the trades.

Which broker should a trader sign up with to trade 60-second binary options?

A trader can sign up with Quotex, Pocket Option, or BinaryCent to place 60-second trades.

How successful are 60-second binary options traders?

Those who promote binary options trading describe it as high-reward. A good return on investment is often achievable. This high average return makes this kind of trading attractive to many experts and beginners.

Can I extend a 60-second trade?

The answer is no. As the name suggests, the idea behind a 60-second trade is that you have only one minute to make a profit or a loss. It is therefore very unlikely that you will find a broker that lets you extend the expiry of a 60-second trade, because that is how these trades are structured.

So if you want longer-running positions, you can always choose options with longer expiries instead.

Can I test 60-second trades?

When you are thinking about trading 60-second binary options for the first time, it is a good idea to place some demo trades with virtual money rather than going straight into a real-money environment. How you can try this without losing real money is explained below.