Binary Options trading allows traders of all skill levels to speculate on markets easily. However, ease of use is only one of the many advantages these financial instruments offer.

Perhaps the most significant advantage of trading binaries is their limited downside risk, whether you’re buying or selling. The payout and the amount at risk are known when you open a trade, so you always know how much you stand to gain (or lose).

To trade binaries, you first have to open a Binary Options account with a broker. There is no shortage of binary options brokers, but there are only a handful of recognized ones that traders prefer to sign up with.

The account creation process may differ slightly from broker to broker, but the steps you need to follow are essentially the same. Here’s a guide on how to complete binary options registration and get started with Binary Trading.

How to open a Binary Options account:

You can start trading with a trusted Binary Options broker by completing the Binary Options registration in four easy steps.

Step #1: Get a device connected to the Internet

Binary options are most commonly traded on online binary trading platforms. To create an account and start trading, you will need access to a computer or a phone connected to the internet.

Besides having a phone or a computer that can connect to the internet, you must have a stable internet connection. Consider getting a connection from a dependable internet service provider, since slow internet speeds and disconnections can cost you money, for example, if you cannot exit a trade in time.

Step #2: Choose a trusted Binary Options Broker

Not every broker allows binary trading. Therefore, even if you have a trading account with a broker, you may not be able to use the same broker to trade Binary Options.

You will need to select a specialized binary options broker to speculate using binaries. While most of these brokers allow clients from most countries to use their platform, there is a chance that the broker you pick does not allow traders from your country to trade on its platform.

You must bear this in mind and ensure that the broker will allow you to use its platform before making an account. It will save you a lot of time and effort.

Furthermore, it is critical that you pick a well-regulated broker that allows trading binaries on assets and markets that you’re familiar with. Switching to trading assets in markets you aren’t familiar with increases the chances of losing money when trading. Finally, you must make sure that the broker you choose offers its services at competitive prices. Paying slightly higher fees may not seem like a problem; however, the costs add up to a large amount as you make more and more trades.
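To see how small cost differences add up, here is a minimal Python sketch. The per-trade costs (50 and 60 cents) and the 1,000-trade count are hypothetical figures, not any broker’s actual pricing, and `extra_cost_cents` is an illustrative helper. Amounts are kept in whole cents to avoid floating-point rounding.

```python
def extra_cost_cents(cost_a_cents: int, cost_b_cents: int, num_trades: int) -> int:
    """Return how much more the pricier broker costs over num_trades, in cents.

    cost_a_cents and cost_b_cents are hypothetical per-trade costs.
    """
    per_trade_difference = abs(cost_a_cents - cost_b_cents)
    return per_trade_difference * num_trades


# Hypothetical example: 50 cents vs. 60 cents per trade, over 1,000 trades.
extra = extra_cost_cents(50, 60, 1_000)
print(f"Extra cost: ${extra / 100:.2f}")  # prints "Extra cost: $100.00"
```

A 10-cent difference looks negligible on one trade, but it grows linearly with the number of trades you place.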

With these factors in mind, we’ve shortlisted three of the best Binary Options brokers in the market that you can sign up with.

#1 Pocket Option

Founded in 2017, Pocket Option is a recognized brokerage that allows binary trading. With a straightforward registration process, over 130 assets to trade, and availability in most countries, it is hard to pick other brokers over Pocket Option.

The minimum deposit is $50, and the minimum trade value is $1. Perhaps the best thing about the platform, though, is that you can sign up and use the demo account free of charge and without any commitment. This makes it the perfect platform for novice traders.

The platform also offers features like social trading and hosts tournaments with varying prizes. The easy-to-use web interface and mobile app make trading convenient.

(Risk warning: Your capital can be at risk)

#2 Quotex.io

Quotex.io is a relatively new Binary Options broker – it was founded in 2020. But it has quickly established itself as a leading brokerage. In addition, it is a member of the IFMRRC, making it a regulated and trustworthy platform for traders.

The platform imposes payment restrictions on traders from the US, Canada, Hong Kong, and Germany. However, as long as you’re over 18 years of age, you can fund your account using cryptocurrency and trade.

The minimum deposit amount is $10, and the platform also offers a demo account, enabling traders to practice trading in real time without risking any capital. Yields can be very high, up to 90% or more. Personally, I like this trading platform; it works smoothly and fast.

(Risk warning: Your capital can be at risk)

#3 IQ Option

IQ Option is one of the most popular Binary Options brokers, with over 40 million registered users. The brokerage is regulated by the Cyprus Securities and Exchange Commission and also the Seychelles Financial Services Authority.

It is considered the go-to broker by novice traders globally. The minimum deposit for a standard account is $10, and you instantly get access to over 350 assets to trade. The brokerage also offers VIP accounts with additional benefits to traders who deposit more than $1,900 within two days.

There are no deposit bonuses, but the brokerage holds several trading tournaments. Winners receive prizes ranging from $100 to $100,000.

IQ Option is available in over 213 countries. However, the platform is not available to traders in countries such as the United States, Russia, Canada, Australia, France, Japan, Belgium, and some Middle Eastern countries, due to stricter regulations.

Like Quotex.io, IQ Option also offers a demo account that you can use to practice trading strategies without any risk.

(Risk warning: Your capital can be at risk)

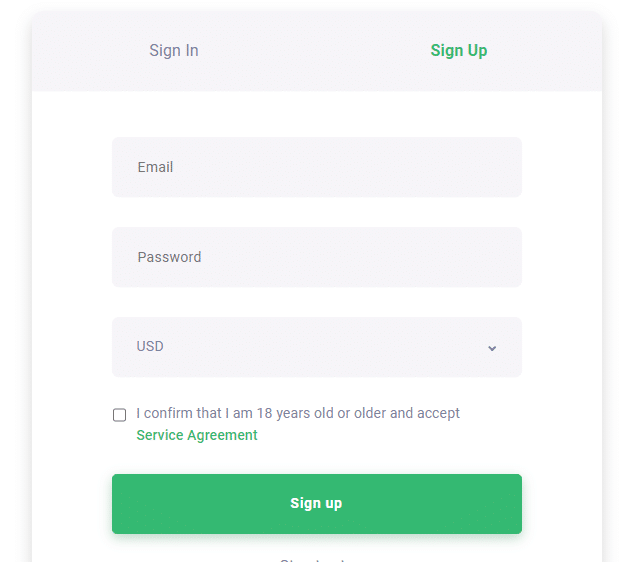

Step #3: Open an account and fund it

After you pick one of the many binary options brokers, you must open an account with it. To do so, you will need to meet the brokerage’s account requirements and supply your:

- First and last name

- Country of residence

- Preferred trading currency

- Preferred payment method

- Email address and

- Password

Some brokerages also ask for your phone number. It may be used to confirm your identity, for two-factor authentication, or for providing you support over the phone if you need it.

Most brokers do not ask for your payment information during the binary options registration process, but some may require you to enter it.

Verifying your identity:

After you supply your basic information, the broker will ask you to verify your identity. This requires you to upload a copy of your government-issued ID to the broker’s secure portal. Some brokerages also require clients to join a video call for identity verification.

Brokerages verify every trader’s identity to prevent two things:

- Fraud: Verification keeps out individuals under 18 and traders from restricted countries, who are not allowed on the platform.

- Identity theft: Verifying all users of the platform reduces the chances of hackers stealing a user’s identity and successfully impersonating them.

Verification usually takes less than three days. Waiting to get verified is inconvenient, but it goes a long way toward keeping both the platform and its traders safe.

That said, some brokers do not require verification and allow you to start trading right after you enter your basic information and set a password.

(Risk warning: Your capital can be at risk)

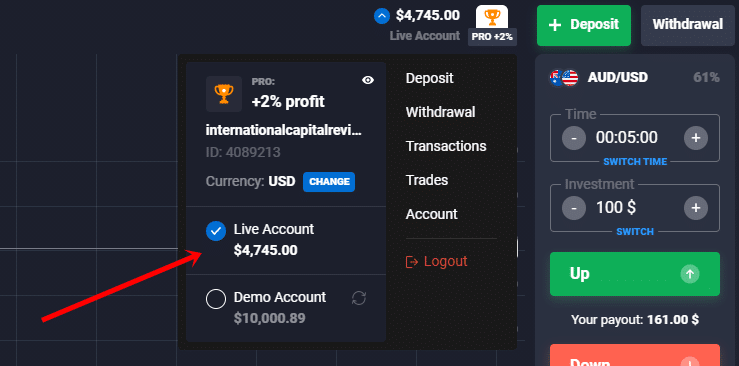

Step #4: Use your Binary Options trading demo account or live account

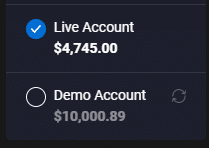

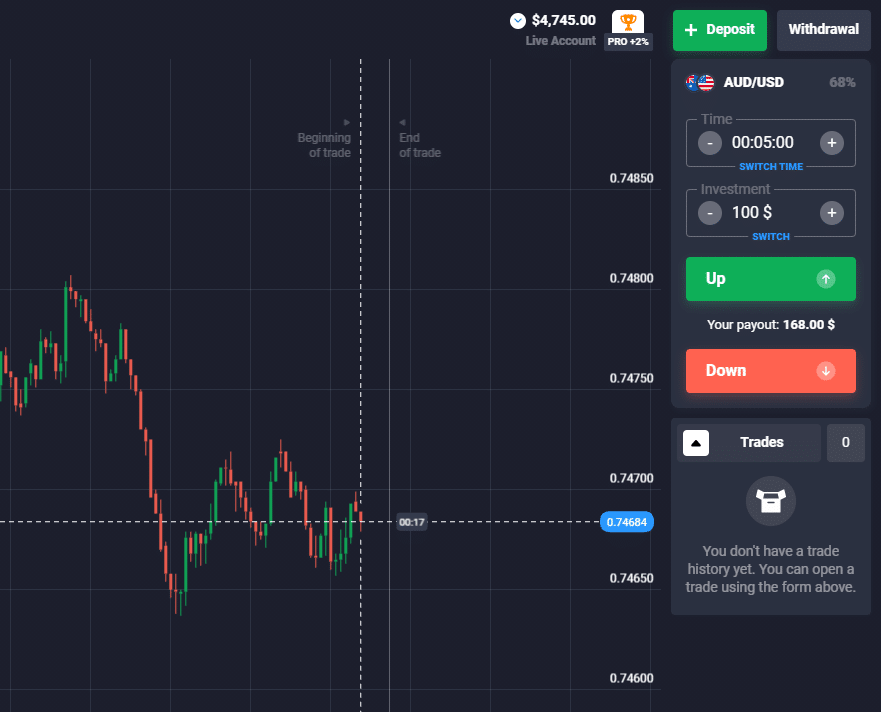

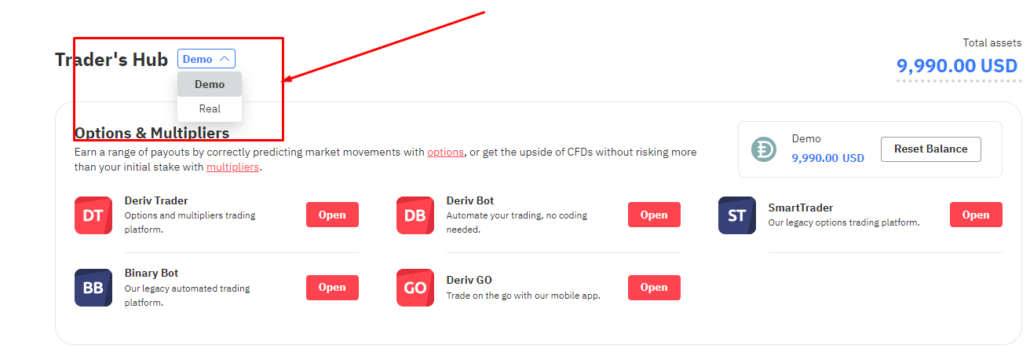

The majority of Binary Options brokers give you access to two accounts: a live account and a demo account. The demo account enables you to place trades with “demo” money in real time.

There is no need to download and install software to use this account. When you access it, you will be redirected to a page that gives you access to the platform with the demo money.

Demo accounts are used to practice trading strategies without needing to spend real capital. Using a demo account is the most convenient way to test strategies and learn how to trade if you’re a beginner.

To use the live account, you must fund it using methods like a wire transfer. Then, you can use the funds in the trading account to make trades on the live market.

Normal account vs managed trading account

A standard binary options account lets you trade financial derivatives on the broker’s platform yourself, but some brokers also offer managed binary options accounts. A managed account is run by a professional who takes care of your investments or gives investment advice. From our research, taking a managed account can be very risky, because most brokers do not employ professionals who can trade profitably.

Different Binary Options account types explained:

Choosing an account type is challenging, especially when you do not yet know what type of trading you want to do. Once you have worked that out, choosing an account type becomes easier. Here are the most common account types for binary options trading.

The virtual account (demo)

Have you just started your investment journey but are too afraid to invest real money? Don’t worry: virtual accounts can help you overcome this fear. Before investing real money in binary options, you can test your strategies with a virtual account (demo account). It will help boost your confidence in trading and analyzing binary options.

A virtual account works much like a real account, except that it does not use real money.

You can still place trades and see what the result would have been if you had used real cash, but there is no possibility of winning or losing actual money. Hence, it is a risk-free way of trading.

Savvy investors also use virtual accounts to test the honesty of the platform, so that they know the information it shows is reliable and trustworthy. When trading with actual money, you need a solid winning technique, and virtual accounts help you develop strategies of your own. Knowing the platform thoroughly is also a must. For instance, some binary options expire within mere seconds, so reducing latency can mean the difference between a win and a loss.

The low minimum account

So, you now have a dependable winning strategy and are ready to start investing real money. A low minimum account is the perfect choice for you and any other beginner who wants to start trading with real cash. Investors with a low minimum account can trade live on the platform, but there is a catch: their account balances are lower than usual.

Standard binary options accounts usually have a balance of around $500, and anything below that is considered a low minimum account, although the exact categorization depends on your broker. Such accounts go by names like Micro, Basic, or Beginner.

The lower risk comes with downsides. You will miss out on many investment opportunities and other privileges. Account holders cannot use leverage, simply because they don’t have enough funds. A low minimum account can also be a disadvantage when high-risk opportunities or options with much shorter time frames come up.

Even though the risks in these opportunities are high, they can reap greater rewards. Once you upgrade your account, all these privileges and investment opportunities are added to your repertoire.

The exotic trades account:

Did you ever think that you would be able to trade on what the weather in Texas will be a week from now? Exotic trades let you do just that. From prices to weather conditions, you can bid on almost anything.

Exotic trading lets you bid on these unusual types of binary options. For example, you can bid on the future weather conditions of a particular place or on the interest rates announced by the Federal Reserve.

The exotic trades account gives you access to different option types, which proves to be beneficial for your investment journey.

The most popular option types are listed below:

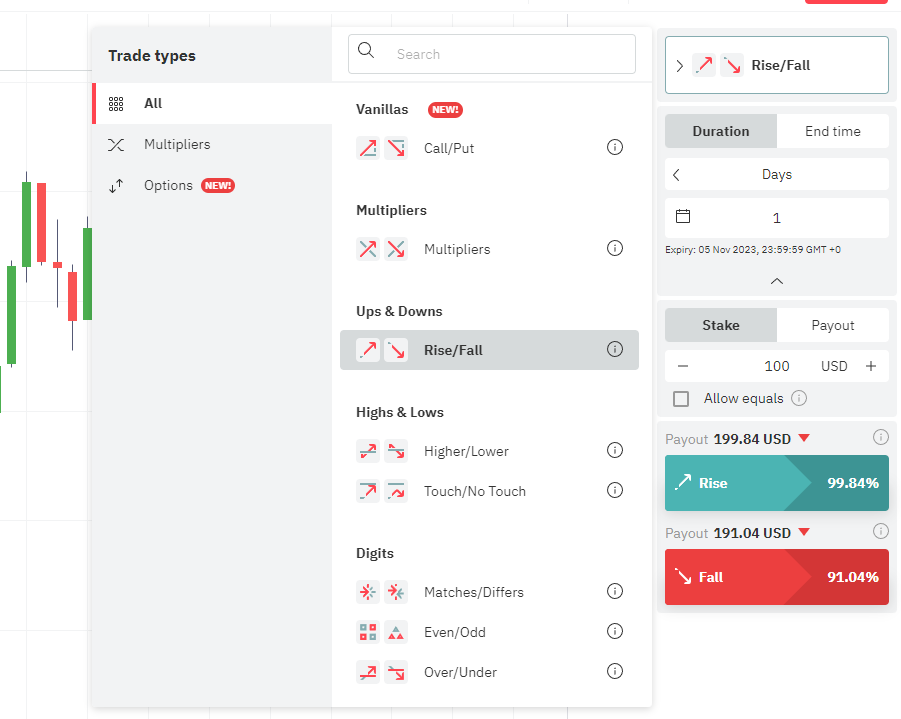

High/Low

This is the most basic type of binary options trade. You pick an asset, which can be a stock, currency, commodity, or index, and the platform then asks you a question:

“Will the X asset’s value be higher or lower than its current price when Y time expires?”

You simply choose ‘high’ or ‘low’ based on your forecast. If your prediction is correct, that is, if the asset’s price ends up on the side of the current price that you chose when the clock runs out, you win the trade. Otherwise, it’s a loss.
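The High/Low rule can be written as a short Python sketch. It is a simplified model, not any broker’s settlement logic: `high_low_outcome` is a hypothetical helper, the strike is assumed to be the price when the trade was opened, and an expiry price exactly equal to the strike is assumed to count as a tie.

```python
from typing import Literal

Prediction = Literal["high", "low"]
Outcome = Literal["win", "loss", "tie"]


def high_low_outcome(prediction: Prediction, strike: float, expiry_price: float) -> Outcome:
    """Settle a simplified High/Low binary option on its expiry price.

    strike is assumed to be the asset price when the trade was opened.
    """
    if expiry_price == strike:
        return "tie"  # no price change (assumed to be refunded by the broker)
    went_higher = expiry_price > strike
    if (prediction == "high") == went_higher:
        return "win"
    return "loss"


print(high_low_outcome("high", 1.1000, 1.1025))  # prints win
print(high_low_outcome("low", 1.1000, 1.1025))   # prints loss
```

Only the side of the strike matters here; how far the price moves past it does not change the result.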

In/out

It is closely related to ‘Double No-touch’ trades. You predict whether the asset’s price will end up inside or outside a specific range. If you predicted ‘in’ and the price is within the range when the time expires, you win the trade; if you predicted ‘out’, you win when the price ends up outside the range.
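Here is a minimal Python sketch of the In/Out rule, with a stricter double no-touch check for comparison. Both helpers are hypothetical. `in_out_outcome` looks only at the expiry price and assumes the range boundaries count as inside, while `double_no_touch_wins` assumes every observed price must stay strictly between the barriers; brokers define these details themselves.

```python
from typing import Literal, Sequence


def in_out_outcome(prediction: Literal["in", "out"], low: float, high: float,
                   expiry_price: float) -> str:
    """Settle a simplified In/Out trade using only the expiry price."""
    inside = low <= expiry_price <= high  # boundaries count as inside (assumption)
    wins = inside if prediction == "in" else not inside
    return "win" if wins else "loss"


def double_no_touch_wins(prices: Sequence[float], low: float, high: float) -> bool:
    """Stricter variant: every observed price must stay strictly inside the range."""
    return all(low < p < high for p in prices)


path = [100.0, 101.2, 99.4, 100.6]  # hypothetical prices from opening to expiry
print(in_out_outcome("in", 99.0, 101.0, path[-1]))  # prints win
print(double_no_touch_wins(path, 99.0, 101.0))      # prints False
```

In this example the price ends inside the range, so the In/Out trade wins, but the path briefly crossed 101.0, so a double no-touch trade on the same range would lose.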

Ladder

Ladder trades are a relatively new invention, and they can be a bit complicated to execute and profit from. Ladder trading is similar to High/Low and One Touch trades: you are still predicting which direction an asset’s value will go and within which timeframe.

However, in ladder trades, the trade is broken down into parts, which allows you to have partial wins and losses. Ladder trades are usually placed by professional traders, and it may take some time before you earn profits from this type.
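Because the description of ladder trades above is brief, the following Python sketch makes one assumption explicit: each part (rung) of the ladder is modeled as its own High/Low trade with its own strike and stake, settled on the same expiry price. `Rung`, `ladder_profit`, the 80% payout, and the stakes are hypothetical; real ladder products may be structured differently.

```python
from dataclasses import dataclass
from typing import Literal, Sequence


@dataclass
class Rung:
    """One part of a ladder trade (hypothetical model)."""
    strike: float
    prediction: Literal["high", "low"]
    stake: float


def ladder_profit(rungs: Sequence[Rung], expiry_price: float, payout_pct: int) -> float:
    """Net profit across all rungs; each rung settles like its own High/Low trade."""
    total = 0.0
    for rung in rungs:
        if expiry_price == rung.strike:
            continue  # tie: stake assumed refunded, so no gain or loss
        went_higher = expiry_price > rung.strike
        if (rung.prediction == "high") == went_higher:
            total += rung.stake * payout_pct / 100  # winning rung earns the payout
        else:
            total -= rung.stake  # losing rung forfeits its stake
    return total


rungs = [Rung(100.0, "high", 10.0), Rung(104.0, "high", 10.0), Rung(108.0, "high", 10.0)]
print(ladder_profit(rungs, expiry_price=105.0, payout_pct=80))  # prints 6.0
```

Here two rungs win and one loses, which is the kind of partial win the ladder structure allows.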

Touch/No-Touch

This type of trading asks you a simple question:

“Will this X asset reach the Y trigger point before the Z expiry time?”

All you have to do is predict whether the price will touch the trigger point or not. Remember that in touch trades, the further the trigger point is from the current price, the higher the payout; in no-touch trades, it is the exact opposite. If your prediction is correct, you win the trade. Otherwise, you lose your investment.
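Unlike High/Low, a touch trade depends on the whole price path, not just the expiry price. This Python sketch assumes the platform records a list of prices from opening to expiry; `touched` and `touch_outcome` are hypothetical helpers, not a real platform API.

```python
from typing import Sequence


def touched(prices: Sequence[float], trigger: float) -> bool:
    """True if any observed price before expiry reached the trigger point.

    prices[0] is assumed to be the price when the trade was opened.
    """
    start = prices[0]
    if trigger >= start:
        return any(p >= trigger for p in prices)  # trigger above the opening price
    return any(p <= trigger for p in prices)      # trigger below the opening price


def touch_outcome(prediction: str, prices: Sequence[float], trigger: float) -> str:
    """prediction is "touch" or "no-touch"."""
    hit = touched(prices, trigger)
    wins = hit if prediction == "touch" else not hit
    return "win" if wins else "loss"


path = [100.0, 101.5, 103.0, 102.0]  # hypothetical prices from opening to expiry
print(touch_outcome("touch", path, 103.0))     # prints win
print(touch_outcome("no-touch", path, 103.0))  # prints loss
```

The price reached 103.0 before falling back, so the touch trade wins even though the asset expired below the trigger.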

The professional account

As the name suggests, these accounts are meant for sophisticated traders. This account type carries more risk than any of the others discussed. Its holders have high average account balances and multiple trades going on simultaneously.

Professional traders try to use several binary options positions as hedges against each other. Used efficiently, this strategy can give high returns, but misuse can lead to huge losses.

The professional account also permits the highest levels of leverage.

As mentioned earlier, leverage allows the trader to invest many times more than the existing account balance. However, if the investor loses the trade, they can lose more than the value of their account and end up owing money to the broker.
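The arithmetic behind losing more than your account balance can be sketched in Python. This assumes a simple model in which the position size is balance × leverage and the loss is a percentage of that position; `balance_after_loss` and the figures (a $1,000 balance, 50x leverage, a 3% adverse move) are hypothetical, and the model ignores any stop-out or negative-balance protection a broker may apply.

```python
def balance_after_loss(balance: float, leverage: int, adverse_move_pct: int) -> float:
    """Account balance after a leveraged position moves against the trader.

    Position size = balance * leverage; the loss is adverse_move_pct percent
    of that position. A negative result means the trader owes the broker money.
    """
    position = balance * leverage
    loss = position * adverse_move_pct / 100
    return balance - loss


print(balance_after_loss(1_000, leverage=50, adverse_move_pct=3))  # prints -500.0
```

At 50x leverage, a 3% move against a $50,000 position costs $1,500, which is $500 more than the account holds. Without leverage, the same move would cost only $30.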

These accounts give professional investors access to the full range of trades. Depending on their trading style, they can take part in high-frequency trading or bet on exotic assets. Some brokers also offer professional account holders personalized assistance, which may include a concierge service and access to proprietary educational materials.

Conclusion: Opening a Binary Options account is easy and fast

Depending on the broker you sign up with, Binary Options registration may take anywhere from a few hours to several days.

It is important to note that if a broker doesn’t require a specific form of identity verification, that doesn’t make it any less reliable. Most Binary Options brokers are reliable, and the chances of signing up with a dishonest broker are very low.

Now that you know how to complete binary options registration, you can quickly finish the sign-up process and start trading.

(Risk warning: Your capital can be at risk)

Frequently asked questions about Binary Options accounts:

What are the possible results of placing a Binary Options trade?

If your prediction about the direction of the price movement is right, the stipulated profit is credited to your account.

If your prediction is wrong when the option expires, you lose the amount you invested.

If there’s zero change in the price, you will receive the amount you invested back.

Your losses are limited to the amount you invested in the trade.
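These outcomes can be summarized in a small Python sketch. `settle` is a hypothetical helper, the 95% payout rate is an illustrative figure, and amounts are in whole cents, with winnings rounded down to the cent (an assumption; brokers set their own rounding).

```python
def settle(stake_cents: int, payout_pct: int, outcome: str) -> int:
    """Net change to the account, in cents, for one binary options trade.

    outcome is "win", "loss", or "tie" (no price change).
    """
    if outcome == "win":
        return stake_cents * payout_pct // 100  # stipulated profit, rounded down
    if outcome == "loss":
        return -stake_cents  # never more than the amount invested
    if outcome == "tie":
        return 0  # stake returned, no gain or loss
    raise ValueError(f"unknown outcome: {outcome!r}")


for result in ("win", "loss", "tie"):
    # $100 stake (10,000 cents) at a hypothetical 95% payout
    print(result, settle(10_000, 95, result))
```

Whatever the outcome, the loss is capped at the stake, which is what makes the downside of a binary options trade known in advance.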

Do I have to fund my trading account at registration?

You will need to fund your account with at least the minimum deposit. The minimum deposit varies from brokerage to brokerage. It is typically $50 or less, but some brokers have a higher minimum deposit requirement.

Can I trade Binary Options from my phone?

If the broker you sign up with has an app available on the Google Play Store or the App Store, you will be able to trade binaries on your phone without much hassle. Most brokers have mobile-friendly websites, so you should be able to trade on your phone by visiting the site on your browser if an app isn’t available.

Can I close my Binary Trading account later on?

Yes, it is easy to delete your account from a trading platform. Most trading platforms have a “Delete Account” option on their page. However, some require you to contact the customer support team to delete your account.

How much money do demo accounts have?

Typically, demo accounts come with 10,000 units of your preferred currency. That said, the “standard” funds in the demo account differ from platform to platform.